hi everybody,

is there anybody out there that has one or more OpenCL capable AMD/ATI GPUs and would be willing to help with testing and tuning?

the latest beta version of mike brown's GPU package for LAMMPS now has support for OpenCL on GPUs with the same feature set as for CUDA acceleration, so it is no longer limited to Nvidia GPUs.

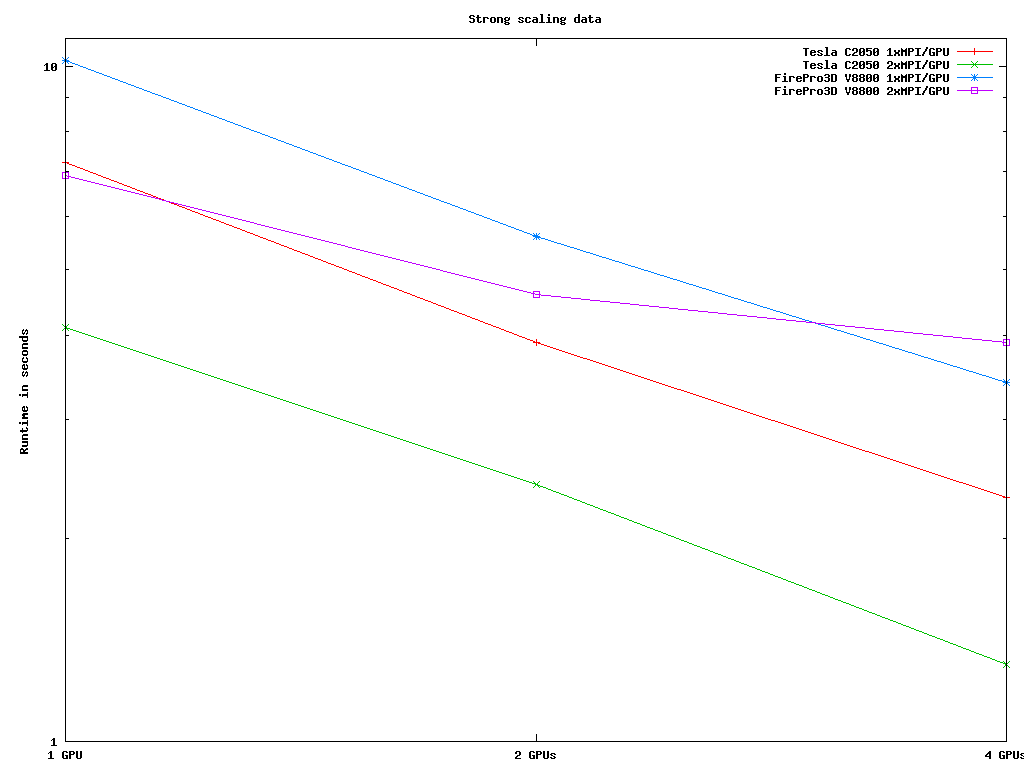

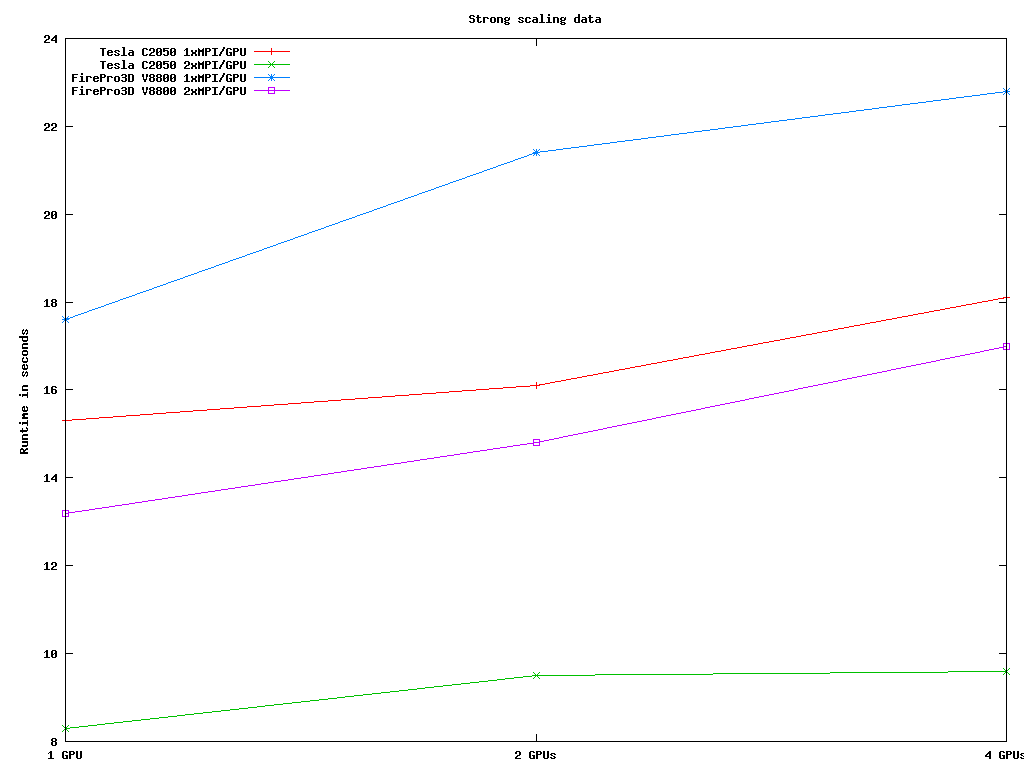

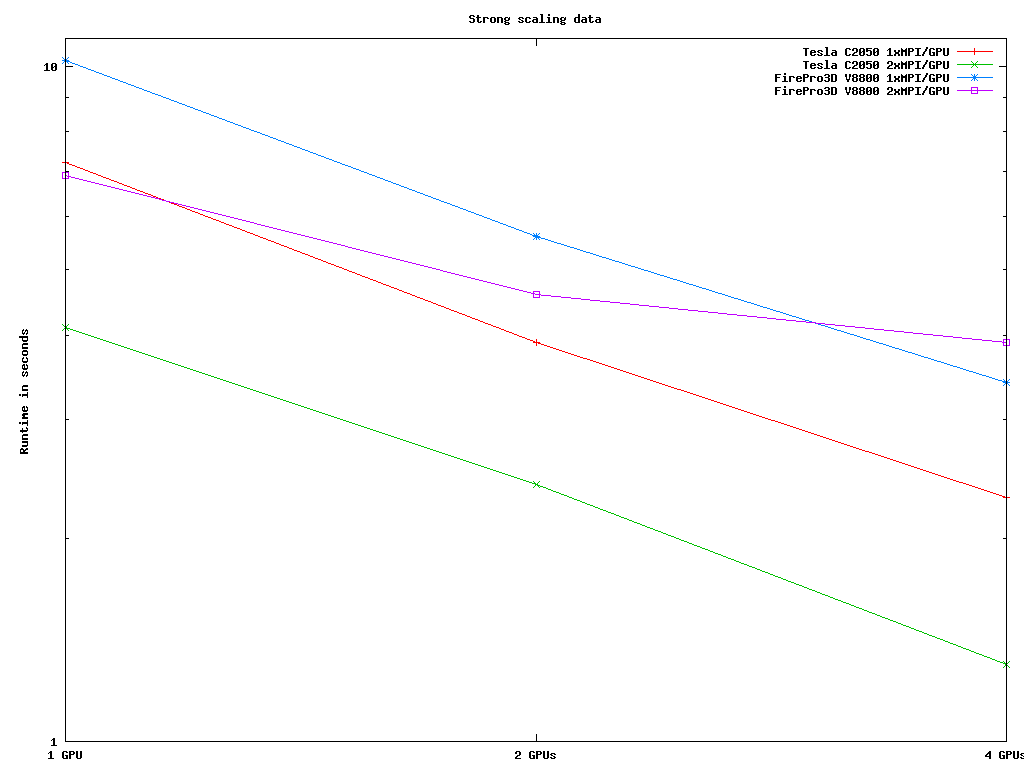

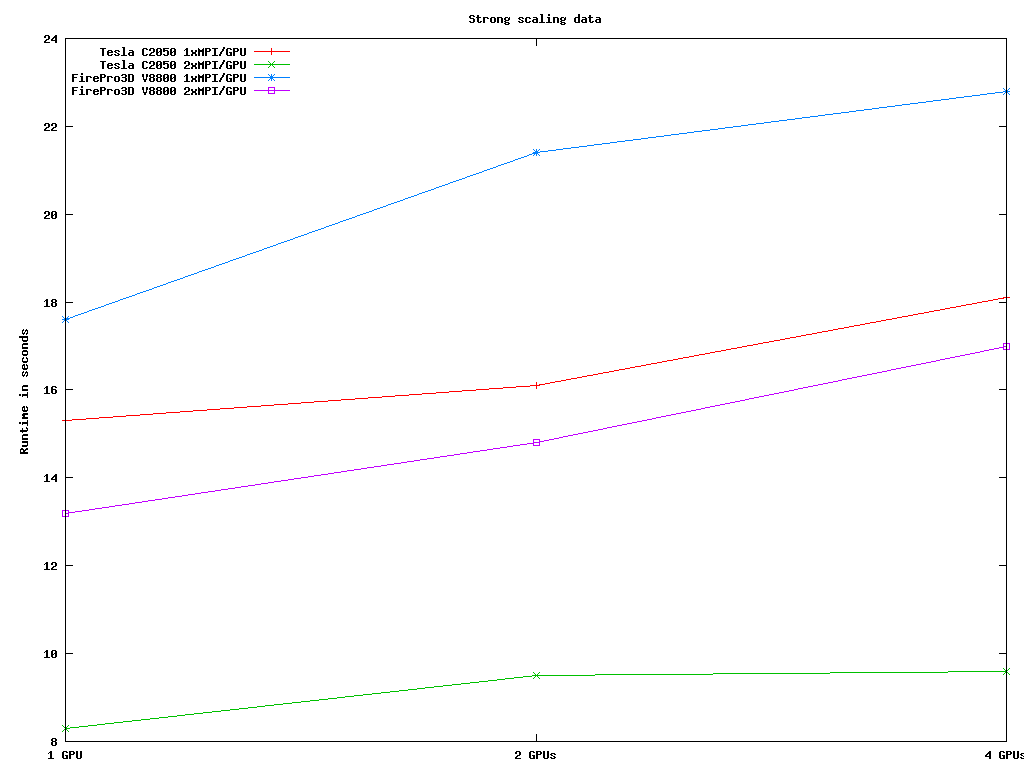

over the last few days i have been able to set up a machine with four GPUs donated by AMD and it is looking quite good (see attached images) compared to NVIDIA Tesla GPUs using CUDA (those will work with the OpenCL version as well, as long as you have a recent nvidia driver), but it would be nice to have tests on more diverse hardware, and this seems like a nice holiday project after you get bored from stuffing yourself with turkey, mashed potatoes, gravy and stuffing, eh?

for the rest: happy t-day.

cheers,

axel.

Hi Axel,

I understand that the OpenCL version of the GPU package is no longer supported by the developers.

But perhaps somebody has already encountered (or even solved) the memory leak that occurs during GPU-OpenCL execution?

I am using LAMMPS (11Aug17) built with gcc 4.3.3 and MPICH version 3.0.4, with either the CUDA or the OpenCL version of the GPU package.

For the tests I have used the input file from examples/melt replicated by 4x4x4 (tests with other models show the same problem).

If I build LAMMPS with the CUDA version of the GPU package everything works well.

However, the OpenCL version shows a gradual memory leak during the MD run that eventually ends with a segfault-type error when 100% of the memory is in use.

I have tested the OpenCL version with GTX 1070 and FirePro S9150 cards.

In both cases, there is this memory leakage issue.

So it does not seem to be a GPU driver problem.

What could be the reason for such erroneous behaviour of the OpenCL version?

Any hints where I should look for this problem in the code?
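To put a number on the leak described above, the resident memory of the running LAMMPS process could be sampled at regular intervals and fitted with a straight line. The following is only a minimal sketch, assuming a Linux host where `/proc/<pid>/status` reports a `VmRSS` line. The function names (`parse_vmrss_kb`, `read_rss_kb`, `leak_rate_kb_per_s`, `monitor`) are hypothetical helpers and not part of LAMMPS. It tracks host memory only, so device memory would have to be checked with the GPU vendor's tools.

```python
import re
import sys
import time


def parse_vmrss_kb(status_text):
    """Return the VmRSS value in kB from /proc/<pid>/status text, or None."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def read_rss_kb(pid):
    """Read the current resident set size of a process (Linux only, assumed)."""
    try:
        with open(f"/proc/{pid}/status") as fh:
            return parse_vmrss_kb(fh.read())
    except FileNotFoundError:
        return None  # process has exited


def leak_rate_kb_per_s(samples):
    """Least-squares slope of (elapsed_seconds, rss_kb) samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("all samples share the same timestamp")
    return num / den


def monitor(pid, interval_s=5.0, count=12):
    """Collect up to `count` samples, stopping early if the process exits."""
    samples = []
    start = time.monotonic()
    for _ in range(count):
        rss = read_rss_kb(pid)
        if rss is None:
            break
        samples.append((time.monotonic() - start, rss))
        time.sleep(interval_s)
    return samples


if __name__ == "__main__" and len(sys.argv) > 1:
    data = monitor(int(sys.argv[1]))
    if len(data) >= 2:
        print(f"estimated growth: {leak_rate_kb_per_s(data):.1f} kB/s")
```

Running the script with the process ID of one MPI rank as its only argument, once for the CUDA build and once for the OpenCL build on the same input, would show whether the growth is specific to the OpenCL build and roughly how fast it is.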

Kind regards,

Vladimir