I have been looking at using KOKKOS to accelerate some molecular simulations with class 2 force fields. I’m seeing reasonable speed-ups and started experimenting with some of the KOKKOS package options to see if I could squeeze out a little more performance. However, some results in the performance timings don’t make sense to me, and I was hoping someone might have some guidance.

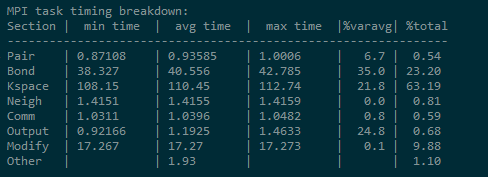

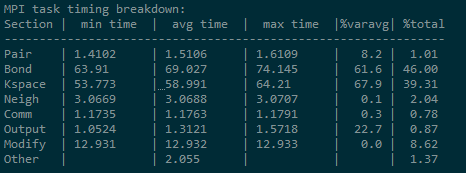

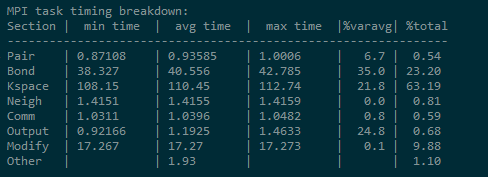

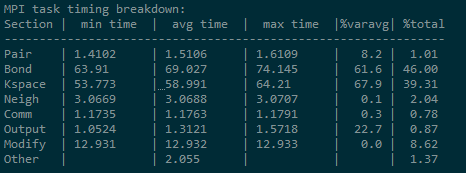

For pair_style lj/class2/coul/long, one specifies a global cutoff and an optional Coulomb cutoff. When I increase the Coulomb cutoff, I see a sizeable increase in the Bond timing. I have attached the timing results for two runs. The simulations are identical except that the Coulomb cutoff is 9.5 Angstroms in the first and 12.0 Angstroms in the second. If I extend it to 15 Angstroms, the average Bond time increases to ~303.
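For a rough sense of how much extra pair work the longer Coulomb cutoff adds: at uniform density, the number of neighbors inside a cutoff r_c grows as r_c^3, so the real-space Coulomb work should scale roughly as the cube of the cutoff ratio. The minimal Python sketch below assumes uniform density and ignores the neighbor-list skin and the unchanged LJ cutoff; `pair_work_ratio` is a hypothetical helper, not part of LAMMPS.

```python
import math


def neighbors_in_sphere(cutoff, density):
    """Expected number of neighbors within `cutoff` at uniform number density."""
    return 4.0 / 3.0 * math.pi * cutoff**3 * density


def pair_work_ratio(old_cutoff, new_cutoff):
    """Relative growth of real-space pair work when the cutoff is extended.

    The density cancels in the ratio, leaving (new_cutoff / old_cutoff)**3.
    """
    return (new_cutoff / old_cutoff) ** 3


if __name__ == "__main__":
    for rc in (12.0, 15.0):
        print(f"9.5 -> {rc:4.1f} Angstrom: ~{pair_work_ratio(9.5, rc):.2f}x pair work")
```

Under these assumptions, going from 9.5 to 12.0 Angstroms roughly doubles the real-space Coulomb work (about 2.02x), and going to 15.0 Angstroms roughly quadruples it (about 3.94x).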

I tried a similar comparison running on a CPU and found that the timing for the bonds stayed essentially constant as I expected. If someone has an explanation of why the Coulombic cutoff is affecting the bond timings, I would appreciate the guidance.

LAMMPS v2Aug2023

The timer category summaries are not representative when you have GPU acceleration and the GPU kernels run concurrently with calculations on the CPU. If Pair runs on the GPU and Bond does not, the time for Bond may include the time spent waiting until the GPU kernel has completed and the data has been transferred from GPU memory to CPU memory.
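The effect described above can be illustrated with a toy model of one MD step in Python. It assumes (hypothetically; this is not the LAMMPS timer code) that the pair kernel runs asynchronously on the GPU, that bonds are computed on the host, and that the host waits for the GPU results at the end of the Bond section. Kernel launch overhead is neglected.

```python
from dataclasses import dataclass


@dataclass
class StepTimes:
    pair: float  # time charged to the Pair timer
    bond: float  # time charged to the Bond timer


def attributed_times(pair_gpu, bond_host, launch_blocking):
    """Toy model of how one step's wall time is charged to timer categories.

    pair_gpu:  seconds the (hypothetical) pair kernel needs on the GPU
    bond_host: seconds the bond computation needs on the host
    """
    if launch_blocking:
        # The launch returns only after the GPU finishes, so Pair sees the
        # full kernel time and Bond sees only its own work.
        return StepTimes(pair=pair_gpu, bond=bond_host)
    # Asynchronous launch: the Pair timer stops right after the launch, the
    # host computes bonds while the GPU is busy, and any remaining GPU time
    # is spent waiting inside the Bond section.
    return StepTimes(pair=0.0, bond=max(bond_host, pair_gpu))
```

With illustrative values, `attributed_times(2.0, 0.5, launch_blocking=False)` charges 0.0 to Pair and 2.0 to Bond, while the blocking case charges 2.0 and 0.5. Growth in GPU pair work then shows up in the Bond row unless launches are blocking, even though the overlapped step (2.0) is faster than the serialized one (2.5).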

Even on the CPU, some overlap is allowed for better performance. For accurate timing, you need to use the timer sync command, which enforces an MPI barrier between categories.
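A similar toy model, in Python, illustrates why a barrier between timer categories matters with several MPI ranks. It is a hypothetical sketch, not the LAMMPS Timer implementation: ranks do unequal work in one category, the next category begins with a collective operation that no rank can leave until the slowest one arrives, and, as an assumption for illustration, the barrier wait is charged to the category that just finished.

```python
def split_wait(work_per_rank, next_work, sync):
    """Per-rank (time in category A, time in category B) for a toy two-category step.

    work_per_rank: hypothetical compute time of each rank in category A
    next_work:     compute time of category B (equal on all ranks)
    sync:          whether a barrier separates A from B
    """
    slowest = max(work_per_rank)
    charged = []
    for work in work_per_rank:
        wait = slowest - work  # idle time until the slowest rank catches up
        if sync:
            # Barrier at the end of A: the idle time stays with A, and B starts
            # simultaneously on all ranks, showing only its own work.
            charged.append((work + wait, next_work))
        else:
            # No barrier: the rank leaves A early and idles inside B's
            # collective, so A's load imbalance inflates B's timer.
            charged.append((work, wait + next_work))
    return charged
```

With `work_per_rank=[1.0, 3.0]` and `next_work=0.5`, the unsynchronized case reports (1.0, 2.5) on the fast rank, so its idle time appears in the following category; with `sync=True` both ranks report (3.0, 0.5).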

Thank you for the reply, Axel. I had forgotten about the timer sync option. I added it to my input script.

My understanding of the KOKKOS-accelerated styles, fixes, etc. is that they all run on the GPU unless `<style>/kk/host` is specified in the input file. Is that correct? If so, my bond, angle, etc. styles should all be running on the GPU, since they all have KOKKOS versions and I’m not telling them to run on the host in the input script.

EDIT: I had forgotten to export CUDA_LAUNCH_BLOCKING, so I’m rechecking the timings now that it is actually set correctly.

I also set the CUDA_LAUNCH_BLOCKING environment variable for these timing tests. Am I understanding the documentation correctly that this should prevent overlap of computations on the CPU and GPU and give correct timings even if some styles are running on the CPU?

Yes, see the note in section 7.4.3 (KOKKOS package) of the LAMMPS documentation:

> To get an accurate timing breakdown between time spent in pair, kspace, etc., you must set the environment variable CUDA_LAUNCH_BLOCKING=1. However, this will reduce performance and is not recommended for production runs.
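CUDA_LAUNCH_BLOCKING only takes effect if it is present in the environment of the LAMMPS process, so a variable that is set in the shell but not exported has no effect. The Python sketch below passes an explicit environment to the child process instead. The executable name `lmp`, the input file name, and the helper names are hypothetical; `-k on g N -sf kk -in FILE` are the standard LAMMPS command-line switches for a KOKKOS GPU run.

```python
import os
import subprocess


def lammps_env(blocking=True):
    """Return a copy of the current environment for a LAMMPS child process.

    With blocking=True, CUDA_LAUNCH_BLOCKING=1 is set explicitly, which is what
    `export CUDA_LAUNCH_BLOCKING=1` achieves in a shell. With blocking=False the
    variable is removed so kernels launch asynchronously (production setting).
    """
    env = dict(os.environ)  # copy; os.environ itself is left unchanged
    if blocking:
        env["CUDA_LAUNCH_BLOCKING"] = "1"
    else:
        env.pop("CUDA_LAUNCH_BLOCKING", None)
    return env


def lammps_command(input_file, gpus=1):
    """KOKKOS GPU command line; the executable name `lmp` is an assumption."""
    return ["lmp", "-k", "on", "g", str(gpus), "-sf", "kk", "-in", input_file]


def run_timing_job(input_file, gpus=1, blocking=True):
    """Hypothetical helper: run LAMMPS with (or without) blocking kernel launches."""
    return subprocess.run(
        lammps_command(input_file, gpus),
        env=lammps_env(blocking),
        check=True,
    )
```

For a timing run, `run_timing_job("in.class2")` launches LAMMPS with blocking kernel launches; production runs would pass `blocking=False` to keep the CPU/GPU overlap.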


After properly exporting CUDA_LAUNCH_BLOCKING=1, the timings made much more sense. There was a clear tradeoff between Pair and Kspace time and, as expected, no appreciable change in the Bond time. Thanks to @akohlmey and @stamoor.
