Dear experts,

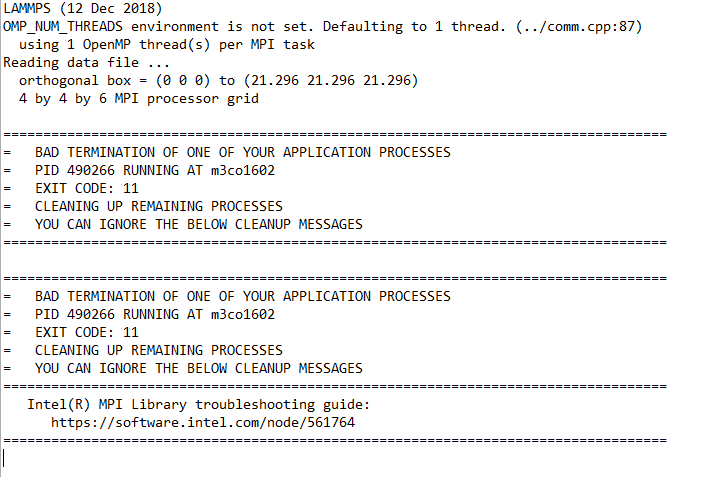

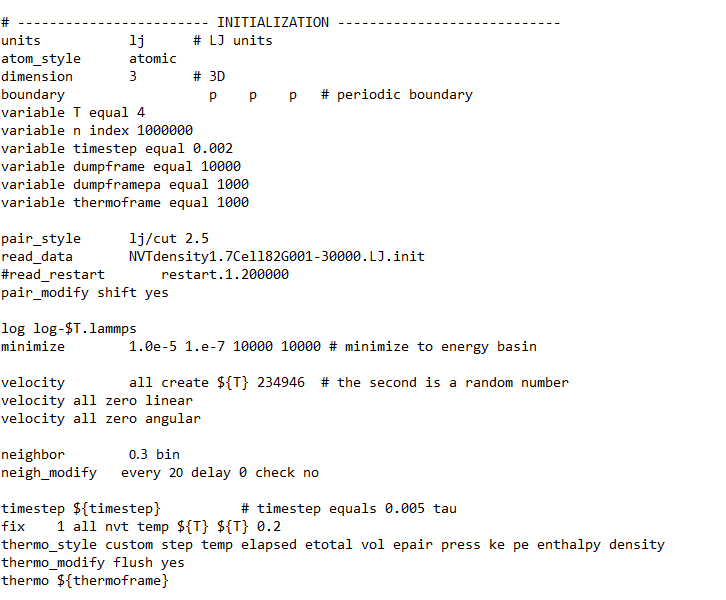

I use the LJ potential function, my total number of atoms is 16000, each atom represents a type, so I have 16000 atom types, so my potential parameter file is very large, I write the potential parameter information and the initial configuration position information in the same data file, about 3.7GB. I used parallel 96 cores to run LAMMPS, but there was an error like the title during the run (I also attached the error information). After my check, I guess the reason is that the input file is too large (I have run the same script with 4000 scale can run), so I have the following questions:

-

Is there any limit on the amount of data in LAMMPS data files? If there is a limit, does this error not occur if I set up the potential parameter file separately (about 3.6GB)?

-

I have attached the main code of my in file, and I would like to ask whether my neighbor related Settings are inappropriate in my case, which leads to this error?

The above are my questions. At the same time, I would like to ask how I should deal with this situation.

Nobody will seriously look into debugging an issue with a 5 year old LAMMPS version. There are so many issues that have been found and resolved since and so many enhancements added to LAMMPS that you are missing out big time.

There are multiple possible reasons, but they will be difficult to track down from the outside unless you provide the complete input deck and have verified that the issue still exists with the latest LAMMPS version (currently 2 August 2023).

The first item you should investigate is if and how the crash depends on the number of MPI processes. Using 96 MPI processes for just 16000 LJ atoms is likely very inefficient, doing so for 4000 would be ridiculous and likely slowing your down more than speeding up.

For a plain LJ potential, you will be hard pressed to see a measurable speedup after reaching fewer than 1000 atoms per MPI rank. With such an extreme ratio, a likely cause is that you have no atoms in a specific processor’s subdomain.

Beyond that, you have to use regular programming tools like valgrind or a debugger to at least identify the location of the crash (11.4. Debugging crashes — LAMMPS documentation). E.g. if you can manage to have your program create core dumps and then investigate those, you can get a stack trace and we can look at those.

But again, unless the issue can be reproduced with the current LAMMPS development version, there will be no fix (unless you fix it yourself), if at all needed.