Hello.

I’m a Japanese student. If there is anything wrong with my question, please let me know.

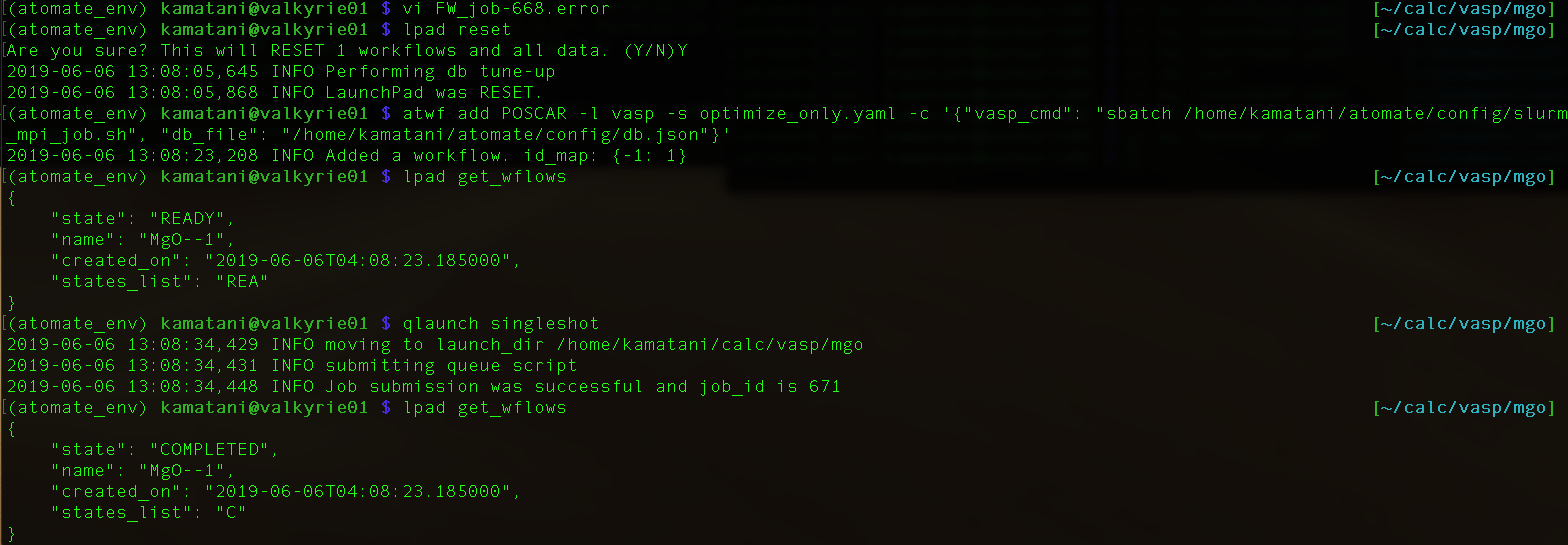

I’m following the “Installing atomate” guide and have finished the “Submit the workflow” step. However, the following error appeared in “FW_job-***.error”:

    Validation failed: <custodian.vasp.validators.VasprunXMLValidator object at 0x150f6451eb70>
    Traceback (most recent call last):
      File "/home/kamatani/.pyenv/versions/3.7.3/lib/python3.7/site-packages/fireworks/core/rocket.py", line 262, in run
        m_action = t.run_task(my_spec)
      File "/home/kamatani/.pyenv/versions/3.7.3/lib/python3.7/site-packages/atomate/vasp/firetasks/run_calc.py", line 205, in run_task
        c.run()
      File "/home/kamatani/.pyenv/versions/3.7.3/lib/python3.7/site-packages/custodian/custodian.py", line 328, in run
        self._run_job(job_n, job)
      File "/home/kamatani/.pyenv/versions/3.7.3/lib/python3.7/site-packages/custodian/custodian.py", line 452, in _run_job
        raise ValidationError(s, True, v)
    custodian.custodian.ValidationError: Validation failed: <custodian.vasp.validators.VasprunXMLValidator object at 0x150f6451eb70>
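
To narrow this down, one check that might help is whether a usable vasprun.xml exists in the launch directory at all. The sketch below is only an assumption about what the validator is complaining about (a missing or incomplete vasprun.xml); it does not call custodian, and the helper name `check_vasprun` is hypothetical. It uses only the Python standard library and tests whether the file is present and is well-formed XML.

```python
import os
import xml.etree.ElementTree as ET


def check_vasprun(run_dir="."):
    """Hypothetical helper: report the state of vasprun.xml in run_dir.

    Returns "missing" if the file does not exist, "malformed" if it is
    not well-formed XML (for example, a truncated run), and "ok" otherwise.
    This only checks XML well-formedness, not whether VASP converged.
    """
    path = os.path.join(run_dir, "vasprun.xml")
    if not os.path.isfile(path):
        return "missing"
    try:
        ET.parse(path)
    except ET.ParseError:
        return "malformed"
    return "ok"


if __name__ == "__main__":
    # Run from inside the launcher directory that produced the error.
    print(check_vasprun("."))
```

A result of "missing" would suggest VASP never wrote output in that directory before validation ran, while "malformed" would suggest the run stopped partway through.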

In the “Add a workflow” step, I used the following command:

    atwf add -l vasp -s optimize_only.yaml -m mp-149 -c '{"vasp_cmd": "sbatch /home/kamatani/atomate/config/slurm_mpi_job.sh", "db_file": "/home/kamatani/atomate/config/db.json"}'

I don’t know what is causing this error.

Please help me.