I completed the atomate2 setup and ran the sample relax and band structure workflows. However, the output files were all zipped, and I'm not sure why this is happening. When I tried to query my database for results, it just printed `None`.

Also, in the job log file I saw this message: `WARNING Response.stored_data is not supported with local manager.`

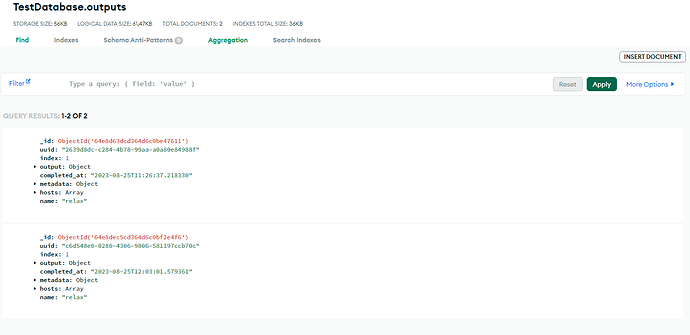

I am encountering the same problem after running the test relax workflow described on the atomate2 page. I unzipped the output files, and the relaxation job completed correctly. When I log into my MongoDB database, I see what is shown in the attached screenshot in my outputs collection, and I'm not sure what to make of it. It seems as though communication with the MongoDB database has been established, since information about the jobs is stored there, but I don't understand why there is an issue uploading the outputs to the database. I'm new to this forum and don't know how to move this to the atomate2 category. It's currently in the atomate category, which might not be the right place since the question is about atomate2.

Were you ever able to resolve this issue? Any assistance would be appreciated.

Hi, I have a similar problem. Have you solved it?

I’ve been working on other matters lately, but I’ll let you know if I resolve the issue when I come back to it.

Hello everyone,

Any luck figuring this out? I'm facing the same issue, and in fact also with a JSONStore.

I stumbled across this thread while searching for something else, but I can offer some info here. The files are zipped because that is standard procedure in the custodian package when executing VASP calculations. If they are zipped, then the data has most likely already been stored in the results database. The warning about `stored_data` is unrelated; it is just additional data used to make sure jobflow works properly with FireWorks.
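If you want to inspect the zipped outputs without unzipping them by hand, they can be read in place. Below is a minimal sketch using only the Python standard library. `read_maybe_gzipped` is a hypothetical helper (not part of atomate2 or custodian), and it assumes each compressed file keeps its original name plus a `.gz` suffix, e.g. `OUTCAR.gz`.

```python
from __future__ import annotations

import gzip
from pathlib import Path


def read_maybe_gzipped(path: str | Path) -> str:
    """Return the text of a file, falling back to its gzipped copy.

    Looks for `path` first, then for `path` + ".gz" (assumed naming of
    the compressed outputs).
    """
    path = Path(path)
    gz_path = path.with_name(path.name + ".gz")
    if path.exists():
        return path.read_text()
    if gz_path.exists():
        # "rt" decompresses on the fly and decodes to text
        with gzip.open(gz_path, "rt") as handle:
            return handle.read()
    raise FileNotFoundError(f"neither {path} nor {gz_path} exists")
```

For example, `read_maybe_gzipped("path/to/job_dir/OUTCAR")` returns the OUTCAR text whether or not it has been compressed. pymatgen's VASP parsers such as `Vasprun` also accept gzipped paths directly.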

The results should be stored in your `JOB_STORE`, depending on how you configured jobflow. Please make sure that basic jobflow execution is working properly and writing to the database. If that works, then the atomate2 part should work automatically.

Hi @QueryQuark, just to confirm, it looks like everything has worked successfully. The outputs of your jobs have been added to the database. If you click on the little arrow next to “output” it will show you the output task document of your job.

To access the outputs you can run:

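```python
from jobflow import SETTINGS

# The JobStore defined in jobflow.yaml (an in-memory store if no config is found)
store = SETTINGS.JOB_STORE
store.connect()

# One (arbitrary) job document; its "output" key holds the task document
results = store.query_one()
print(results)
```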

Provided you have configured the jobflow.yaml file on the computer where you are running the query, it should return some outputs from your calculation.