Dear all,

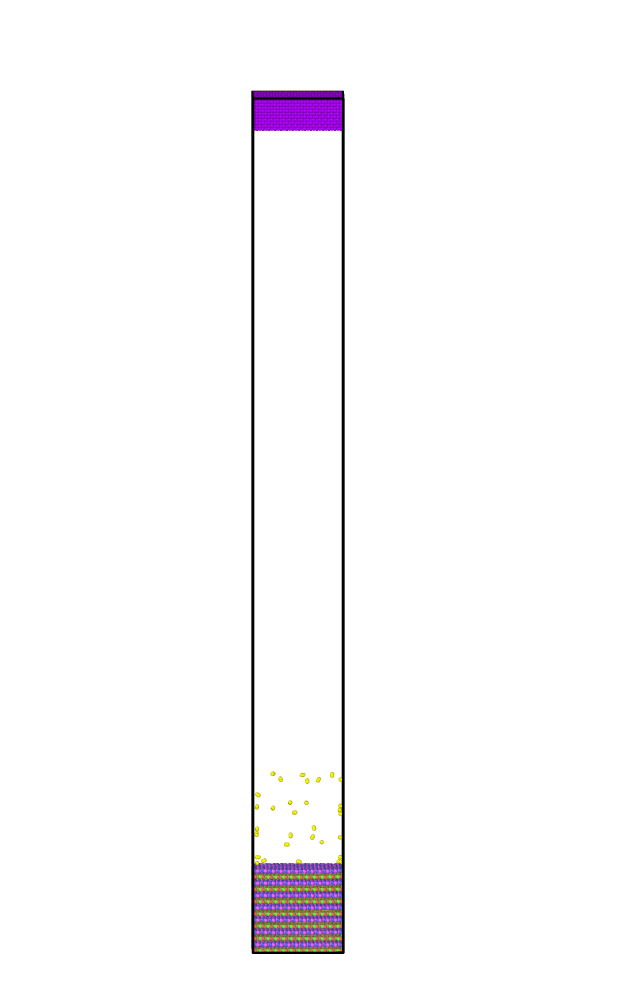

I have an orthogonal simulation box (50 × 50 × 500 Å) with atoms at both ends along the z direction; the middle volume is empty, as shown above. I am investigating the decomposition behavior of the target material by heating it with the fix heat command, so the middle section of the box fills up during the simulation.
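To make the load-imbalance issue concrete, here is a rough Python sketch (not LAMMPS code) that counts atoms per MPI rank for a uniform brick decomposition of the 50 × 50 × 500 Å box. The slab thickness (75 Å) and lattice spacing (2.5 Å) are hypothetical placeholders, since the data file is not shown. The imbalance factor is taken as the maximum atoms per rank divided by the average, which is how the LAMMPS balance documentation defines it.

```python
"""Sketch: atoms per MPI rank for a uniform brick decomposition.

Box size is from the post; SLAB and SPACING are hypothetical placeholders,
since the actual data file is not shown.
"""
import itertools

LX, LY, LZ = 50.0, 50.0, 500.0  # box edges in Angstrom (from the post)
SLAB = 75.0                     # hypothetical slab thickness at each z end
SPACING = 2.5                   # hypothetical simple-cubic spacing


def slab_atoms():
    """Put atoms on a simple cubic grid inside the two end slabs."""
    n_x = int(LX / SPACING)
    n_y = int(LY / SPACING)
    n_z = int(SLAB / SPACING)
    atoms = []
    for i, j, k in itertools.product(range(n_x), range(n_y), range(n_z)):
        x = (i + 0.5) * SPACING
        y = (j + 0.5) * SPACING
        z = (k + 0.5) * SPACING
        atoms.append((x, y, z))       # bottom slab
        atoms.append((x, y, LZ - z))  # mirror image in the top slab
    return atoms


def counts_per_rank(atoms, px, py, pz):
    """Count atoms in each equal-sized brick of a px x py x pz rank grid."""
    counts = [0] * (px * py * pz)
    for x, y, z in atoms:
        ix = min(int(x / (LX / px)), px - 1)
        iy = min(int(y / (LY / py)), py - 1)
        iz = min(int(z / (LZ / pz)), pz - 1)
        counts[(iz * py + iy) * px + ix] += 1
    return counts


def imbalance(counts):
    """Max atoms per rank divided by the average atoms per rank."""
    return max(counts) / (sum(counts) / len(counts))


if __name__ == "__main__":
    atoms = slab_atoms()
    for grid in [(1, 1, 8), (2, 2, 2), (4, 2, 1)]:
        counts = counts_per_rank(atoms, *grid)
        print(grid, "imbalance factor = %.2f" % imbalance(counts))
```

Under these assumptions, cutting along z (1 × 1 × 8) leaves the four middle ranks empty and gives an imbalance factor of about 3.3. A grid whose subdomains each span the full z extent (e.g. 4 × 2 × 1) gives every rank an equal share. In LAMMPS that layout would correspond to something like `processors * * 1`, though whether it beats fix balance for this system is untested here.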

When I test my simulation on my desktop (i7-6700, 4 cores), I get 1.3 timesteps/second with `fix balance all balance ${balanceupt} 1.1 rcb`. However, if I run the same script on the cluster, my job hangs when using 8 or more cores (LAMMPS 3May2020, Open MPI 4.0).
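For context, recursive coordinate bisection (the rcb style) repeatedly cuts the domain so that each side receives a share of atoms proportional to the number of ranks assigned to it. This produces sub-boxes of very different volumes. Per the LAMMPS documentation, the rcb style also requires `comm_style tiled`, which matches the commented-out pair of lines in the script. The Python sketch below is a simplified textbook RCB (cut perpendicular to the longest box edge, at the median by atom count), not LAMMPS's own implementation. It is applied to hypothetical random atoms in two 75 Å end slabs.

```python
"""Simplified recursive coordinate bisection (RCB) sketch.

Textbook variant for illustration only; not the LAMMPS implementation.
Atom positions are hypothetical: random points in two 75 Angstrom slabs
at the z ends of a 50 x 50 x 500 Angstrom box.
"""
import random

BOX_LO = (0.0, 0.0, 0.0)
BOX_HI = (50.0, 50.0, 500.0)


def make_slab_points(n_per_slab, seed=1, slab=75.0):
    """Random atoms in the bottom and top z slabs (hypothetical layout)."""
    rng = random.Random(seed)
    pts = []
    for _ in range(n_per_slab):
        x, y = rng.uniform(0.0, 50.0), rng.uniform(0.0, 50.0)
        pts.append((x, y, rng.uniform(0.0, slab)))            # bottom slab
        x, y = rng.uniform(0.0, 50.0), rng.uniform(0.0, 50.0)
        pts.append((x, y, rng.uniform(500.0 - slab, 500.0)))  # top slab
    return pts


def rcb(points, nparts, lo, hi):
    """Split box [lo, hi] into nparts sub-boxes with balanced atom counts.

    Returns a list of (lo, hi, points) tuples, one per part.
    """
    if nparts == 1:
        return [(lo, hi, points)]
    dim = max(range(3), key=lambda d: hi[d] - lo[d])  # cut the longest edge
    n_left = nparts // 2
    pts = sorted(points, key=lambda p: p[dim])
    split = len(pts) * n_left // nparts  # atoms given to the left half
    if 0 < split < len(pts):
        cut = 0.5 * (pts[split - 1][dim] + pts[split][dim])
    else:
        cut = 0.5 * (lo[dim] + hi[dim])  # nothing to separate: cut mid-box
    left_hi = list(hi)
    left_hi[dim] = cut
    right_lo = list(lo)
    right_lo[dim] = cut
    return (rcb(pts[:split], n_left, lo, tuple(left_hi))
            + rcb(pts[split:], nparts - n_left, tuple(right_lo), hi))


if __name__ == "__main__":
    parts = rcb(make_slab_points(12000), 8, BOX_LO, BOX_HI)
    for lo, hi, pts in parts:
        print(f"z {lo[2]:6.1f}-{hi[2]:6.1f}  x {lo[0]:4.1f}-{hi[0]:4.1f}"
              f"  atoms {len(pts)}")
```

With 8 parts, the first cut lands in the empty middle of the box and the later cuts subdivide each slab. Every part ends up with the same number of atoms even though the sub-box volumes differ widely.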

I searched the mailing list archives for why a simulation hangs with fix balance rcb and found the same question asked before, but I could not find an answer.

Since I am using the ReaxFF potential, I decided to use the KOKKOS package with CUDA.

My question is: should I still use the fix balance command, or something similar? When I test my script with fix balance, performance gets worse, since my script also uses other fixes that KOKKOS does not support.

I tested my script with all fixes and dumps removed on 4 GPUs and 10 cores and got 30 timesteps/second, which is fine. With the fixes and dumps, I get 4 timesteps/second. Changing the number of GPUs from 1 to 4 does not change the performance, so I concluded that the CPU is the bottleneck; this may be caused by load imbalance across the cores. Is there any way to increase performance with KOKKOS/CUDA for my setup?
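The timings above can be put into a simple Amdahl-style model. The sketch below assumes both GPU numbers were measured on the same 4-GPU, 10-core setup. It also assumes the time per step is the bare KOKKOS run time plus an extra cost from fixes and dumps (non-KOKKOS fixes, output, host/device transfers), and that this extra part does not shrink with more GPUs. These are modelling assumptions, not measurements. The conversion to ns/day uses the 0.1 fs timestep from the script.

```python
"""Back-of-envelope throughput arithmetic for the quoted timings.

Assumption (simple Amdahl-style model): per-step time = bare KOKKOS run
time + extra time from fixes/dumps, and the extra part does not shrink
when more GPUs are added.
"""

DT_FS = 0.1                # timestep in the script (fs, real units)
SECONDS_PER_DAY = 86400.0


def ns_per_day(steps_per_second, dt_fs=DT_FS):
    """Convert timesteps/second into simulated nanoseconds per day."""
    return steps_per_second * dt_fs * SECONDS_PER_DAY * 1e-6


def extra_time_per_step(bare_rate, full_rate):
    """Seconds per step added by fixes and dumps (costs assumed additive)."""
    return 1.0 / full_rate - 1.0 / bare_rate


def max_rate_if_extra_is_fixed(bare_rate, full_rate):
    """Upper bound on steps/s if only the bare part gets faster."""
    return 1.0 / extra_time_per_step(bare_rate, full_rate)


if __name__ == "__main__":
    for label, rate in [("desktop, fix balance", 1.3),
                        ("4 GPUs, no fixes/dumps", 30.0),
                        ("4 GPUs, fixes/dumps", 4.0)]:
        print(f"{label:24s} {rate:5.1f} steps/s -> {ns_per_day(rate):.4f} ns/day")
    extra = extra_time_per_step(30.0, 4.0)
    print(f"extra time per step: {extra * 1e3:.1f} ms "
          f"({extra * 4.0:.0%} of each step)")
    print(f"upper bound with infinitely fast GPUs: "
          f"{max_rate_if_extra_is_fixed(30.0, 4.0):.2f} steps/s")
```

Under this model, roughly 87% of each step is spent outside the bare run. Even perfect GPU scaling could then not push the rate much above about 4.6 timesteps/second, which is consistent with 1 to 4 GPUs making no difference.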

A simplified script is attached below. I cannot attach the data file due to the size limit.

Best regards,

Garip

units real

dimension 3

boundary p p f

atom_style charge

variable wobblex equal normal(0,5,1000)

variable wobbley equal normal(0,5,1000)

variable z equal ramp(0,-35)

variable numrun equal 100

variable num_dump equal 10

variable balanceupt equal 1

variable a_heat equal 20 # fix heat eflux (energy/time; kcal/mol/fs in real units)

read_data equilib.data

region heat cylinder z 25 25 8 45 150 side in move v_wobblex v_wobbley v_z units box

region tim block INF INF INF INF INF 8

group target region target

group tim dynamic all region tim every ${gupd}

pair_style reax/c NULL checkqeq no

pair_coeff * * ffield.reax.ni B B N N N N Ni

compute reax all pair reax/c

compute ent1 all entropy/atom 0.25 5

compute ke all ke/atom

compute pe all pe/atom

#neighbor 10.0 bin

#neigh_modify every 1 delay 10 check yes page 100000 cluster yes binsize 7

#comm_modify mode single cutoff 22.0 vel yes

#comm_style tiled

#fix balance all balance ${balanceupt} 1.1 rcb

velocity all create 300 23432 rot yes dist gaussian

fix zwalls all wall/reflect zlo EDGE zhi EDGE

fix 1 all nve

fix 7a target heat ${num_dump} v_a_heat region heat

fix 3 tim temp/berendsen 300 300 ${num_dump}

fix 2d all reax/c/species 1 10 ${num_dump} species.out element Bx By Nc Ny Nx Np Ni

dump 1 all custom ${num_dump} dump.atom* id type x y z vx vy vz fx fy fz q c_ke c_pe c_ent1

thermo 100

timestep 0.1

run ${numrun}
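For reference, here is a small Python sketch of what the heat region and fix heat lines in the script do over the 100-step run. It relies on LAMMPS semantics assumed here from the documentation:

- ramp(x,y) interpolates linearly over the run.
- normal(0,5,seed) returns a fresh Gaussian value each time it is evaluated.
- fix heat adds eflux × N × dt of energy each time it fires (N = num_dump = 10, dt = 0.1 fs, eflux = a_heat = 20 kcal/mol/fs in real units).

Python's random generator stands in for LAMMPS's, so the wobble values will differ.

```python
"""Sketch of the heat region motion and fix heat energy budget.

Assumed semantics (check against the LAMMPS docs): ramp() is linear over
the run, normal() draws a new value per evaluation, and fix heat adds
eflux * N * dt each time it fires. Python's RNG replaces LAMMPS's.
"""
import random

DT = 0.1        # fs, timestep in the script (real units)
NUMRUN = 100    # variable numrun
N_EVERY = 10    # variable num_dump, used as fix heat's N
EFLUX = 20.0    # variable a_heat, kcal/mol/fs in real units


def ramp(x, y, step, start=0, stop=NUMRUN):
    """Linear interpolation from x at the run's first step to y at its last."""
    return x + (y - x) * (step - start) / (stop - start)


def region_offset(step, rng):
    """Displacement of the heat cylinder at `step`.

    x and y are fresh Gaussian draws (mean 0, sigma 5 Angstrom) each call,
    mimicking normal(0,5,seed); z follows ramp(0,-35).
    """
    return rng.gauss(0.0, 5.0), rng.gauss(0.0, 5.0), ramp(0.0, -35.0, step)


def heat_per_application(eflux=EFLUX, n_every=N_EVERY, dt=DT):
    """Energy added each time fix heat fires, assuming eflux * N * dt."""
    return eflux * n_every * dt


def total_heat(nsteps=NUMRUN, n_every=N_EVERY):
    """Energy added over the run, assuming one application every N steps."""
    return (nsteps // n_every) * heat_per_application(n_every=n_every)


if __name__ == "__main__":
    rng = random.Random(1000)  # seed value borrowed from normal(0,5,1000)
    for step in (0, 50, 100):
        dx, dy, dz = region_offset(step, rng)
        print(f"step {step:3d}: dx={dx:+.2f} dy={dy:+.2f} dz={dz:+.2f} "
              f"-> cylinder z range {45 + dz:.1f}-{150 + dz:.1f} A")
    print(f"heat per application: {heat_per_application():.1f} kcal/mol")
    print(f"total over run:       {total_heat():.1f} kcal/mol")
```

With these assumptions, the cylinder's z offset reaches -35 Å at the last step, so it moves from z = 45 to 150 Å down to z = 10 to 115 Å. The run injects about 200 kcal/mol over 10 applications.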