Are you talking about -DKokkos_ENABLE_DEBUG=on or -DKokkos_ENABLE_CUDA_UVM=on?

I'll try to rebuild LAMMPS tomorrow with both, because I'm getting memory allocation errors:

Exception: Kokkos failed to allocate memory for label "atom:dihedral_atom1". Allocation using MemorySpace named "Cuda" failed with the following error: Allocation of size 115.1 M failed, likely due to insufficient memory. (The allocation mechanism was cudaMalloc(). The Cuda allocation returned the error code "cudaErrorMemoryAllocation".)

Exception: Kokkos failed to allocate memory for label "atom:dihedral_atom3". Allocation using MemorySpace named "Cuda" failed with the following error: Allocation of size 116.3 M failed, likely due to insufficient memory. (The allocation mechanism was cudaMalloc(). The Cuda allocation returned the error code "cudaErrorMemoryAllocation".)

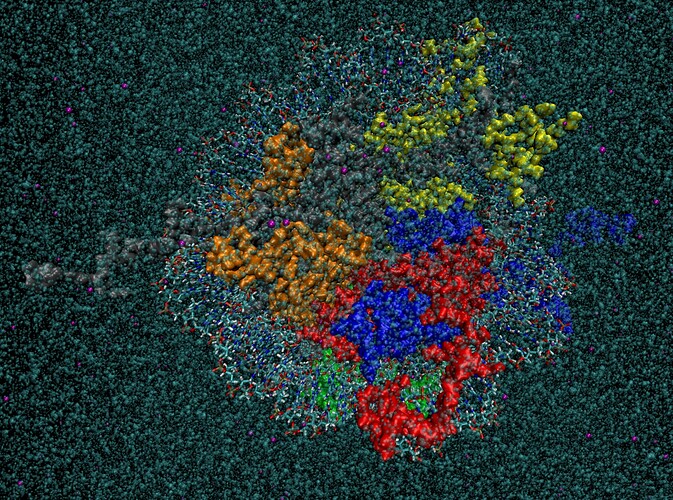

This happens on my GPU simulation of PDB 2CV5:

614751 atoms

72 atom types

419078 bonds

123 bond types

243891 angles

267 angle types

81972 dihedrals

550 dihedral types

3524 impropers

25 improper types

974 crossterms

-93 93 xlo xhi

-93 93 ylo yhi

-93 93 zlo zhi

mpirun -np 32 ~/.local/bin/lmp -in step5_production.inp -k on g 4 -sf kk
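For reference, a quick back-of-the-envelope check of how that command line spreads the work. This is only a sketch: it assumes all 32 ranks and all 4 GPUs sit on a single node, and the helper names are made up for illustration. Whether rank oversubscription is what actually triggers the cudaMalloc failure is a guess on my part, not something the error message confirms.

```python
# Rough load arithmetic for: mpirun -np 32 ... -k on g 4
# Assumption: single node, so all 32 MPI ranks share the 4 GPUs.

def ranks_per_gpu(mpi_ranks: int, gpus: int) -> float:
    """Number of MPI ranks that end up sharing each GPU."""
    return mpi_ranks / gpus

def atoms_per_rank(atoms: int, mpi_ranks: int) -> float:
    """Average number of owned atoms per MPI rank (ignores ghost atoms)."""
    return atoms / mpi_ranks

if __name__ == "__main__":
    ranks, gpus, atoms = 32, 4, 614751  # values from the post
    print(f"ranks per GPU : {ranks_per_gpu(ranks, gpus):.0f}")
    print(f"atoms per rank: {atoms_per_rank(atoms, ranks):.0f}")
```

Each rank makes its own device allocations, so with several ranks per GPU the per-rank buffers compete for the same device memory. Retrying with -np 4 (one rank per GPU) might be a cheap experiment before rebuilding.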

echo screen

variable dcdfreq index 5000

variable outputname index step5_production

variable inputname index step4.1_equilibration

units real

boundary p p p

newton off

# --- IF KOKKOS ---

atom_style full/kk

bond_style harmonic/kk

angle_style charmm/kk

improper_style harmonic # try without kk to solve memory overflow

# --- TO BE IMPLEMENTED IN KOKKOS (BIG TODO)

# pair_style lj/charmmfsw/coul/long/kk 10 12

# dihedral_style charmmfsw/kk

# special_bonds charmm/kk

pair_style lj/charmmfsw/coul/long 10 12

dihedral_style charmmfsw

special_bonds charmm

# --- IF NOT KOKKOS ---

# kspace_style pppm 1e-4

# atom_style full

# bond_style harmonic

# angle_style charmm

# improper_style harmonic

pair_modify mix arithmetic

#kspace_modify fftbench yes

fix cmap all cmap charmmff.cmap

fix_modify cmap energy yes

read_data step3_input.data fix cmap crossterm CMAP

run_style verlet/kk

variable laststep file ${inputname}.dump

next laststep

read_dump ${inputname}.dump ${laststep} x y z vx vy vz ix iy iz box yes replace yes format native

kspace_style pppm/kk 1e-4

neighbor 2 bin

neigh_modify delay 5 every 1

include restraints/constraint_angletype

fix 1 all shake/kk 1e-6 500 0 m 1.008 a ${constraint_angletype}

fix 2 all npt/kk temp 303.15 303.15 100.0 iso 0.9869233 0.9869233 1000 couple xyz mtk no pchain 0

thermo 10

thermo_style one

dump lammpstrj all custom 10 3ft6.lammpstrj id mol type x y z ix iy iz

reset_timestep 0

timestep 2

variable ps loop 1000

label loop

print "================== TIME = $(v_ps/1000:%.3f) ns =================="

run 50

#write_restart 2cv5-solution-timestep_*.lammpsrestart

next ps

jump SELF loop

undump lammpstrj
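A side note on the time bookkeeping in the loop above, sketched in Python. With units real the timestep is in femtoseconds, so "timestep 2" and "run 50" advance 100 fs per iteration, while the print line counts each iteration as 1 ps (v_ps/1000 ns). The constant and function names below are only illustrative.

```python
# Simulated time covered by the run loop (units real -> time in fs).
TIMESTEP_FS = 2.0      # "timestep 2"
STEPS_PER_RUN = 50     # "run 50"
ITERATIONS = 1000      # "variable ps loop 1000"

def ps_per_iteration(timestep_fs: float, steps: int) -> float:
    """Simulated picoseconds covered by one 'run' call."""
    return timestep_fs * steps / 1000.0

def total_ns(timestep_fs: float, steps: int, iterations: int) -> float:
    """Simulated nanoseconds covered by the whole loop."""
    return ps_per_iteration(timestep_fs, steps) * iterations / 1000.0

def steps_for_one_ps(timestep_fs: float) -> int:
    """Steps per 'run' needed so that one iteration equals 1 ps."""
    return round(1000.0 / timestep_fs)

if __name__ == "__main__":
    print(ps_per_iteration(TIMESTEP_FS, STEPS_PER_RUN), "ps per iteration")
    print(total_ns(TIMESTEP_FS, STEPS_PER_RUN, ITERATIONS), "ns in total")
    print(steps_for_one_ps(TIMESTEP_FS), "steps per run for 1 ps per iteration")
```

So the printed TIME label runs ten times ahead of the simulated time unless the run length is raised to 500 steps or the label is rescaled.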

This worked fine on CPU only, but was obviously pretty slow.

Kokkos on GPU runs fine on a fairly minimal example, PDB 3FT6:

30374 atoms

20380 bonds

10782 angles

1448 dihedrals

38 impropers

45 atom types

66 bond types

128 angle types

240 dihedral types

11 improper types

-34.5000 34.5000 xlo xhi

-34.5000 34.5000 ylo yhi

-34.5000 34.5000 zlo zhi

But there is little hope at this point of getting to PDB 6HKT. The CHARMM-GUI job has been running for 3 days and is not even done with the solvation step yet (a lot more complicated than you would expect):

168697 !NATOM

174941 !NBOND: bonds

317565 !NTHETA: angles

462018 !NPHI: dihedrals

23247 !NIMPHI: impropers

17668 !NDON: donors

25274 !NACC: acceptors

5988 !NCRTERM: cross-terms

Even the 3D printer at the faculty of engineering choked on that one!