Dear LAMMPS developers and users,

This issue is a follow-up to my earlier post, "How to set the value of per-atom variables via python?" (LAMMPS Development, Materials Science Community Discourse).

LAMMPS version: 20240829

System: CentOS 7

Python: 3.10.15

Following Axel Kohlmeyer’s advice, I now use fix external to apply the dynamic external force. This works without problems in serial mode, but several problems appeared when I ran in parallel with MPI. The routine that computes the external force must run serially because it needs the properties of all the atoms in the system. All the quantities requested or scattered in the callback function are defined in the LAMMPS input script (which is why the simulation runs normally in serial). Below are some simple Python callback functions I have tried.

    from mpi4py import MPI

    comm = MPI.COMM_WORLD
    me = comm.Get_rank()

    # F1
    def callback(caller, step, nlocal, tag, x, fext):
        comm.Barrier()
        if me == 0:
            foo = caller.numpy.extract_compute('foo', LMP_STYLE_GLOBAL, LMP_TYPE_SCALAR)

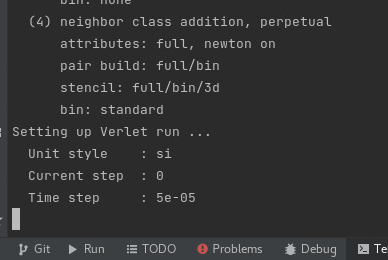

I ran mpirun -np 2 python3 test.py in terminal, the simulation could not start. It didn’t output any more after printing the time step:

A second callback function, F2:

    def callback(caller, step, nlocal, tag, x, fext):
        comm.Barrier()
        if me == 0:
            n = caller.get_natoms()
            b_info = caller.numpy.extract_compute('bInfo', LMP_STYLE_LOCAL, LMP_TYPE_ARRAY)

For F2, mpirun -np 2 and -np 4 actually ran fine (surprisingly), but -np 6 or more processors raised this error:

    Exception ignored on calling ctypes callback function: <function lammps.set_fix_external_callback.<locals>.callback_wrapper at 0x7fa204c9a290>
    Traceback (most recent call last):
      File "lammps/core.py", line 2236, in callback_wrapper
        callback(caller, ntimestep, nlocal, tag, x, f)
      File "test.py", line 24, in callback
        b_info = caller.numpy.extract_compute('bInfo', LMP_STYLE_LOCAL, LMP_TYPE_ARRAY)
      File "lammps/numpy_wrapper.py", line 186, in extract_compute
        return self.darray(value, nrows, ncols)
      File "lammps/numpy_wrapper.py", line 495, in darray
        ptr = cast(raw_ptr[0], POINTER(c_double * nelem * dim))
    ValueError: NULL pointer access

F3:

    def callback(caller, step, nlocal, tag, x, fext):
        comm.Barrier()
        if me == 0:
            var = np.zeros(10)
            caller.scatter_atoms('d_strain', 1, 1, (var.size * c_double)(*var))

For F3, the behavior is the same as for F1: there was no further output after the time step was printed.

F4 removes the if me == 0 guard:

    def callback(caller, step, nlocal, tag, x, fext):
        comm.Barrier()
        foo = caller.numpy.extract_compute('foo', LMP_STYLE_GLOBAL, LMP_TYPE_SCALAR)
        n = caller.get_natoms()
        b_info = caller.numpy.extract_compute('bInfo', LMP_STYLE_LOCAL, LMP_TYPE_ARRAY)
        var = np.zeros(10)
        caller.scatter_atoms('d_strain', 1, 1, (var.size * c_double)(*var))

With -np 2 the simulation ran, but the results were not as expected. With -np 4 or more, it stopped producing output in the same way as F1 and F3.

If comm.Barrier() is removed, the simulation stops producing output in the same way as F1 and F3, regardless of -np.
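For reference, here is a minimal sketch of the "compute serially on one rank, use the result on every rank" pattern described above. It assumes that comm behaves like mpi4py's MPI.COMM_WORLD (only Get_rank() and bcast() are used), and that the per-atom data has already been collected on every rank beforehand. As far as I understand, calls such as gather_atoms and scatter_atoms involve all MPI ranks, so they would have to sit outside any if me == 0 guard. The names compute_on_root_and_share and serial_force_fn are hypothetical placeholders, not part of LAMMPS or mpi4py.

```python
def compute_on_root_and_share(comm, all_atom_data, serial_force_fn):
    """Run serial_force_fn on rank 0 only, then hand the result to every rank.

    comm            : assumed to behave like mpi4py's MPI.COMM_WORLD
    all_atom_data   : data for all atoms, already available on this rank
    serial_force_fn : hypothetical placeholder for the serial force routine
    """
    result = None
    if comm.Get_rank() == 0:
        # Only rank 0 does the serial work.
        result = serial_force_fn(all_atom_data)
    # bcast is a collective call: every rank must reach this line,
    # otherwise the ranks that did call it wait forever.
    return comm.bcast(result, root=0)
```

The point of the sketch is that only the serial computation is guarded by the rank check, while the communication step is executed by all ranks.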

Another question I am pondering: the fext argument is per-processor (it covers only the nlocal atoms owned by the calling rank), but the external forces I compute are for all the atoms across all processors…
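To make the question concrete, here is a minimal sketch of how a table of forces for all atoms could be mapped onto the per-processor fext array through the tag argument (the atom IDs of the local atoms). It assumes that atom IDs are consecutive from 1 to N and that row i of f_all holds the force on atom ID i + 1. The names fill_local_forces and f_all are hypothetical and not part of the LAMMPS API.

```python
def fill_local_forces(tag, fext, f_all):
    """Copy the rows of a global force table that belong to this rank.

    tag   : atom IDs of the atoms owned by this rank (length nlocal)
    fext  : per-rank force array to fill in place, shape (nlocal, 3)
    f_all : forces for all atoms; row i holds atom ID i + 1
            (assumption: consecutive IDs 1..N)
    """
    for i, atom_id in enumerate(tag):
        row = f_all[atom_id - 1]   # atom IDs start at 1, rows at 0
        for k in range(3):
            fext[i][k] = row[k]    # write into the caller's array in place
```

Inside the callback this would be called as fill_local_forces(tag, fext, f_all) on every rank. With NumPy arrays, and under the same assumption about the IDs, the loop could be replaced by fext[:] = f_all[tag - 1].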