Hello,

I would like to specify different numbers of nodes for different fireworks in the same workflow, e.g., 1 node for coarse structure optimizations and 3 nodes for the subsequent accurate optimizations.

I could do this by launching all the preceding fireworks with Adapter 1 (set nnodes: 1 and run qlaunch rapidfire --nlaunches num1), and then launching the subsequent fireworks with Adapter 2 (set nnodes: 3 and run qlaunch rapidfire --nlaunches num2). However, if I have added multiple workflows containing such fireworks, is there an easy way to launch them automatically while respecting a maximum-jobs limit (the way qlaunch rapidfire -m maxjob_number does)?

Hi

You can either set up multiple qadapters (as you proposed) or override the queue adapter settings in the Firework itself by setting the “_queueadapter” field in the FW spec. More documentation is here:

If you do this all your Fireworks (even with different queue settings) should be able to run with the same qlaunch command.
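As a rough illustration of that override (a sketch only, not copied from the documentation): the FW spec is a plain dictionary, so the per-Firework queue settings can be built as below. The helper name make_spec is hypothetical, nnodes is the queue adapter parameter from the question, and the 1-node and 3-node values are the ones asked about.

```python
def make_spec(nnodes, base_spec=None):
    """Return a copy of base_spec with a per-Firework queue override added."""
    spec = dict(base_spec or {})
    # "_queueadapter" is the reserved spec key mentioned above; its entries
    # are meant to override the matching keys of the queue adapter file.
    spec["_queueadapter"] = {"nnodes": nnodes}
    return spec


coarse_spec = make_spec(1)    # coarse optimization on 1 node
accurate_spec = make_spec(3)  # accurate optimization on 3 nodes

# With FireWorks installed, each dict would be passed as the spec, e.g.
#   Firework(tasks, spec=accurate_spec, name="accopt")
print(coarse_spec)
print(accurate_spec)
```

Only the keys listed under “_queueadapter” are overridden; everything else would still come from the queue adapter file.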

Many thanks, Anubhav. Your suggestion of setting “_queueadapter” is very valuable, but this scheme requires reservation mode, which is undesirable for me.

I also overlooked the Server-Worker Model of Fireworks in my proposal. Besides specifying different qadapters, I may also have to assign different fireworkers to the different types of fireworks, as described in this documentation:

https://materialsproject.github.io/fireworks/controlworker.html
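For reference, here is how I understand the category rules on that page, written as a simplified Python model. This is only my reading of the documentation, not the actual FireWorks code: the real matching happens in a database query, and the function name fworker_can_run is hypothetical. List-valued categories and other selectors are ignored.

```python
def fworker_can_run(fworker_category, fw_spec):
    """Simplified model: may a FireWorker with this category pull this Firework?"""
    fw_category = fw_spec.get("_category")
    if not fworker_category:
        # No category set on the worker: it may pull any Firework.
        return True
    if fworker_category == "__none__":
        # Special keyword: only Fireworks without a _category key.
        return fw_category is None
    # Otherwise the categories must match exactly.
    return fw_category == fworker_category


initopt_spec = {}                       # default spec, no category
accopt_spec = {"_category": "accopt"}   # categorized Firework

for worker in ("__none__", "accopt"):
    for label, spec in (("initopt", initopt_spec), ("accopt", accopt_spec)):
        print(worker, label, fworker_can_run(worker, spec))
```

Under this model, a worker with category “__none__” never picks up the “accopt” firework, so a second worker with category “accopt” is needed for it.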

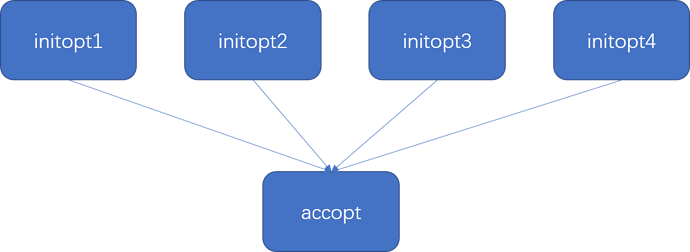

Following the documentation, I tested a workflow organized as shown in the attached picture, but still ran into problems.

I set spec={"_category": "accopt"} when creating the “accopt” FW and left the spec at its default for the “initopt” FWs. After adding the workflow, the 4 “initopt” fireworks were “READY” and the “accopt” firework was “WAITING”, as expected. I then executed

[jinlj@mu06 atomatemx]$ nohup qlaunch rapidfire -m 4 & disown

Soon after, when the “initopt” FWs were still “READY” and the “accopt” FW was still “WAITING”, I executed

[jinlj@mu06 atomatemx]$ nohup qlaunch -q my_qadapter2.yaml -w my_fworker2.yaml rapidfire -m 5 & disown

I expected these commands to launch all 5 FWs automatically. However, only the “initopt” FWs were “COMPLETED” in the end. What mistake did I make?

Here are the files I used to set the default and customized qadapters and fireworkers:

my_fworker.yaml (395 Bytes)

my_fworker2.yaml (393 Bytes)

my_qadapter.yaml (296 Bytes)

my_qadapter2.yaml (296 Bytes)

Thank you very much for providing the suggestion, Anubhav. I’m now trying to realize my idea with multiple qadapters and fireworkers, but I still have a problem. (Sorry for posting this as a separate post instead of replying to you directly.)

I have confirmed that the problem occurs when executing “qlaunch -w my_fworker2.yaml” commands. The FW with the customized category remained “READY” after the queue job submitted by “qlaunch -w my_fworker2.yaml” had finished, yet the same firework completes correctly when I run “rlaunch -w my_fworker2.yaml”. I also checked “FW_job.out” after executing “qlaunch -w my_fworker2.yaml”; the file contains only the following line:

2021-11-01 17:44:30,652 INFO Hostname/IP lookup (this will take a few seconds)

and doesn’t contain “INFO Rocket finished”. Do you know the possible causes?

I have found something new. After I switched the category of the default fworker (my_fworker.yaml) directly from “__none__” to “accopt”, my test firework completed correctly with just “qlaunch singleshot”. I suspect the problem is related to the “FW_CONFIG_FILE” environment variable that I had set earlier for the default qlaunch.

Hi,

Sorry, I am not able to look into the details of your configuration issues. However, if you have multiple FireWorkers with different queue configurations, or do not want to use reservation mode, then as you said the “_queueadapter” key likely would not work.

In that case, you would need to write your own wrapper script that runs multiple qlaunches for you. The wrapper script can check (manually) how many READY jobs of each type exist in the database and, depending on the number of remaining jobs, decide how many of each type to qlaunch. The script would then execute multiple qlaunch commands with the max limit set by the logic in the wrapper script, sleep for some time, and repeat the process after checking how many jobs now exist in the database.
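A minimal sketch of such a wrapper, under stated assumptions: the function names (plan_launches, build_qlaunch_cmd, run_round, run_loop) are hypothetical, the READY counts and the number of free queue slots are supplied by caller-provided functions (for example a LaunchPad query and a scheduler query), and the submit step is injected so the logic can be tried without a queue. The file names and the “initopt”/“accopt” job types are taken from the posts above; treat this as a starting point rather than a tested production script.

```python
import time


def plan_launches(ready_counts, free_slots):
    """Split the free queue slots among job types, earlier types first."""
    plan = {}
    remaining = max(free_slots, 0)
    for job_type, n_ready in ready_counts.items():
        n = min(n_ready, remaining)
        plan[job_type] = n
        remaining -= n
    return plan


def build_qlaunch_cmd(qadapter_file, fworker_file, nlaunches):
    """Build a qlaunch command line using the flags shown in this thread."""
    return ["qlaunch", "-q", qadapter_file, "-w", fworker_file,
            "rapidfire", "--nlaunches", str(nlaunches)]


def run_round(job_types, count_ready, free_slots, submit):
    """Check READY counts once and submit one qlaunch per job type if needed."""
    ready = {name: count_ready(name) for name in job_types}
    plan = plan_launches(ready, free_slots)
    for name, n in plan.items():
        if n > 0:
            cfg = job_types[name]
            submit(build_qlaunch_cmd(cfg["qadapter"], cfg["fworker"], n))
    return plan


def run_loop(job_types, count_ready, count_free_slots, submit,
             rounds, sleep_seconds):
    """Repeat the check-and-submit step, sleeping between rounds."""
    for _ in range(rounds):
        run_round(job_types, count_ready, count_free_slots(), submit)
        time.sleep(sleep_seconds)


JOB_TYPES = {
    "initopt": {"qadapter": "my_qadapter.yaml", "fworker": "my_fworker.yaml"},
    "accopt": {"qadapter": "my_qadapter2.yaml", "fworker": "my_fworker2.yaml"},
}

# Dry run with made-up counts; a real script would query the database and
# pass something like subprocess.run as the submit function.
fake_ready = {"initopt": 4, "accopt": 1}
submitted = []
plan = run_round(JOB_TYPES, fake_ready.get, free_slots=4,
                 submit=submitted.append)
print(plan)
for cmd in submitted:
    print(" ".join(cmd))
```

Here the job types listed first get the free slots first; a different priority rule could be swapped into plan_launches without touching the rest.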

I’m very grateful to receive your reply, Anubhav. I will keep testing feasible schemes, including yours.