Hi @Germain,

I understand that the velocity magnitudes were not precisely the same. If they were, I think (although I am not sure) you would not have been able to get out of the flying xtal state using the Nose-Hoover thermostat. I understood that your drawing is qualitative and was just meant to illustrate your point.

I am not saying that there are no other problems with the input script that @jw_Xie used. But if @jw_Xie ran precisely the same simulation (with the same input file) 1 year ago and now, I suppose that, given how strictly reproducible you are suggesting the calculations are, he would have gotten the same result both times, whether or not that result is bogus. Yet in one case he managed to melt it and in the other he didn't. This holds regardless of the “create velocity” issue and of being stuck for a while in a flying xtal, since he didn't initialize velocities in either of the two cases.

So, although I understand that he should have initialized the system with velocities in order to do things correctly, I disagree that this is the reason why he got different results in the past vs now.

Now just one last comment on my side so that we don't start a conference on the topic:

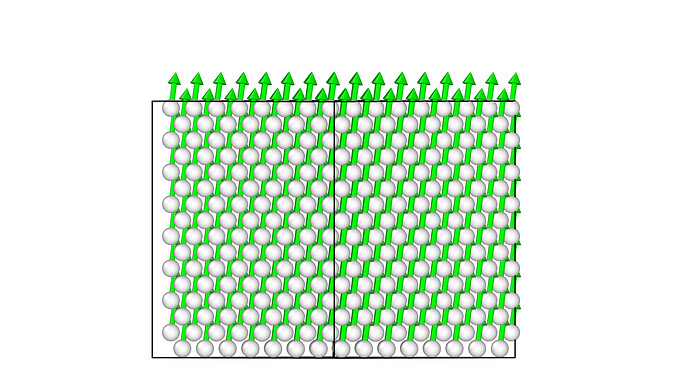

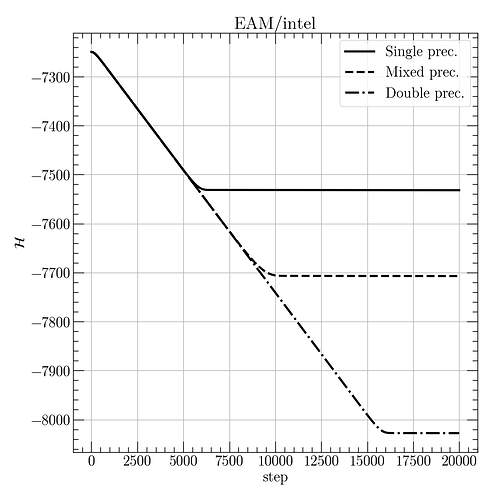

Whether or not it is due to floating-point round-off (and its propagation), I have noticed that a calculation starting from the same input can lead to different microstates at the same time step when I run the simulation on different machines, and I am talking about atom positions that differ by far more than 1E-10 or so (see the example below). Of course, the structure of my system is the same, and so are the other macroscopic properties (otherwise we would all be lost). And of course, in the case of a phase transition, if the force field predicts an underlying melting temperature of X K for the system, the simulations on both machines would capture the event with the same probability at a given temperature. The event may, however, happen at different timesteps, either because the phase-space trajectories are genuinely different or because they are meant to be the same but differ by some delta; I don't know which. So, putting the other issues in @jw_Xie's script aside and aiming to explain solely the difference in results between the run 1 year ago and the run now, I think @initialize may have a point when he talks about it “not being exactly reproducible” in a context where someone is trying to capture something like a phase transition.

E.g.:

In two simulations run with exactly the same input file, each on a different machine (although I realize now that the number of parallel processes is not the same, in case that matters), I have, at time step 1m:

atom 238 at (0.865873, 5.3675667, 7.1528388) on one machine and at (0.61354, 5.60639, 6.97725) on the other machine.
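
To make the floating-point argument concrete, here is a toy Python sketch. It is not LAMMPS and not the system from this thread: the logistic map stands in for chaotic dynamics, and the function name, starting value and 1e-15 offset are all made up for illustration. It only shows two things: that adding the same numbers in a different order (as could happen with a different number of processes) can change the last bits of the result, and that a chaotic system can amplify a difference of that size into a visibly different state.

```python
# Toy illustration only: NOT LAMMPS code, and not the force field or
# system discussed in this thread. All names and values are hypothetical.

def logistic_trajectory(x0, n_steps):
    """Iterate the chaotic logistic map x -> 4 x (1 - x) for n_steps steps."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(4.0 * xs[-1] * (1.0 - xs[-1]))
    return xs

# 1) Floating-point addition is not associative: the same three terms
#    summed in a different order do not give bit-identical results.
sum_order_a = (0.1 + 0.2) + 0.3
sum_order_b = 0.1 + (0.2 + 0.3)
print("sums identical:", sum_order_a == sum_order_b)
print("difference:", abs(sum_order_a - sum_order_b))

# 2) A chaotic map amplifies a round-off-sized difference in the start value.
traj_a = logistic_trajectory(0.3, 200)
traj_b = logistic_trajectory(0.3 + 1e-15, 200)  # assumed tiny offset
gaps = [abs(a - b) for a, b in zip(traj_a, traj_b)]

# First step at which the two trajectories differ by more than 1e-3.
first_visible = next((i for i, g in enumerate(gaps) if g > 1e-3), None)
print("initial gap:", gaps[0])
print("first step with gap > 1e-3:", first_visible)
print("largest gap over the run:", max(gaps))
```

The two trajectories still sample the same set of values overall, which is the toy analogue of the macroscopic properties agreeing while individual atom positions do not.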