Hi all,

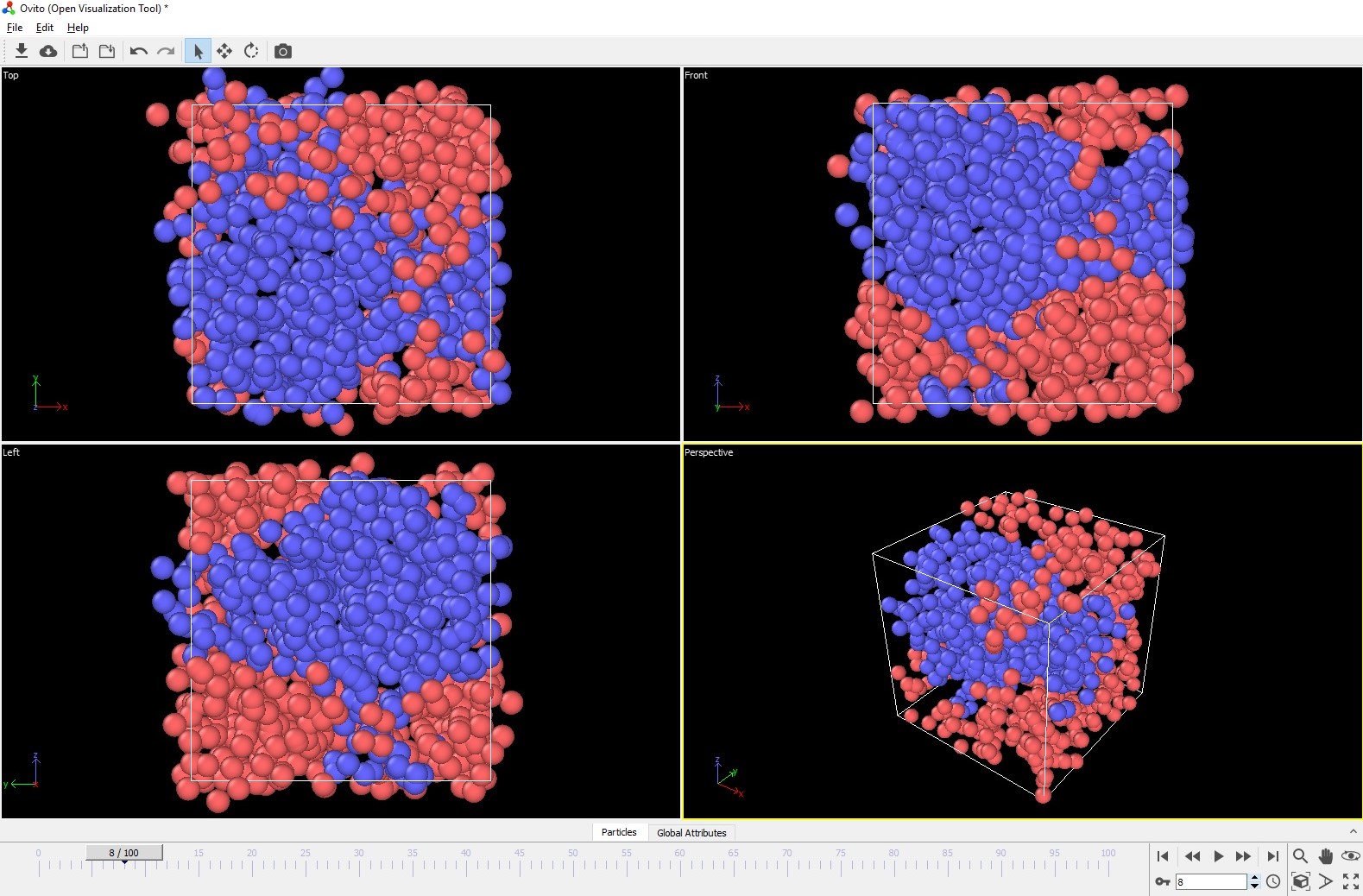

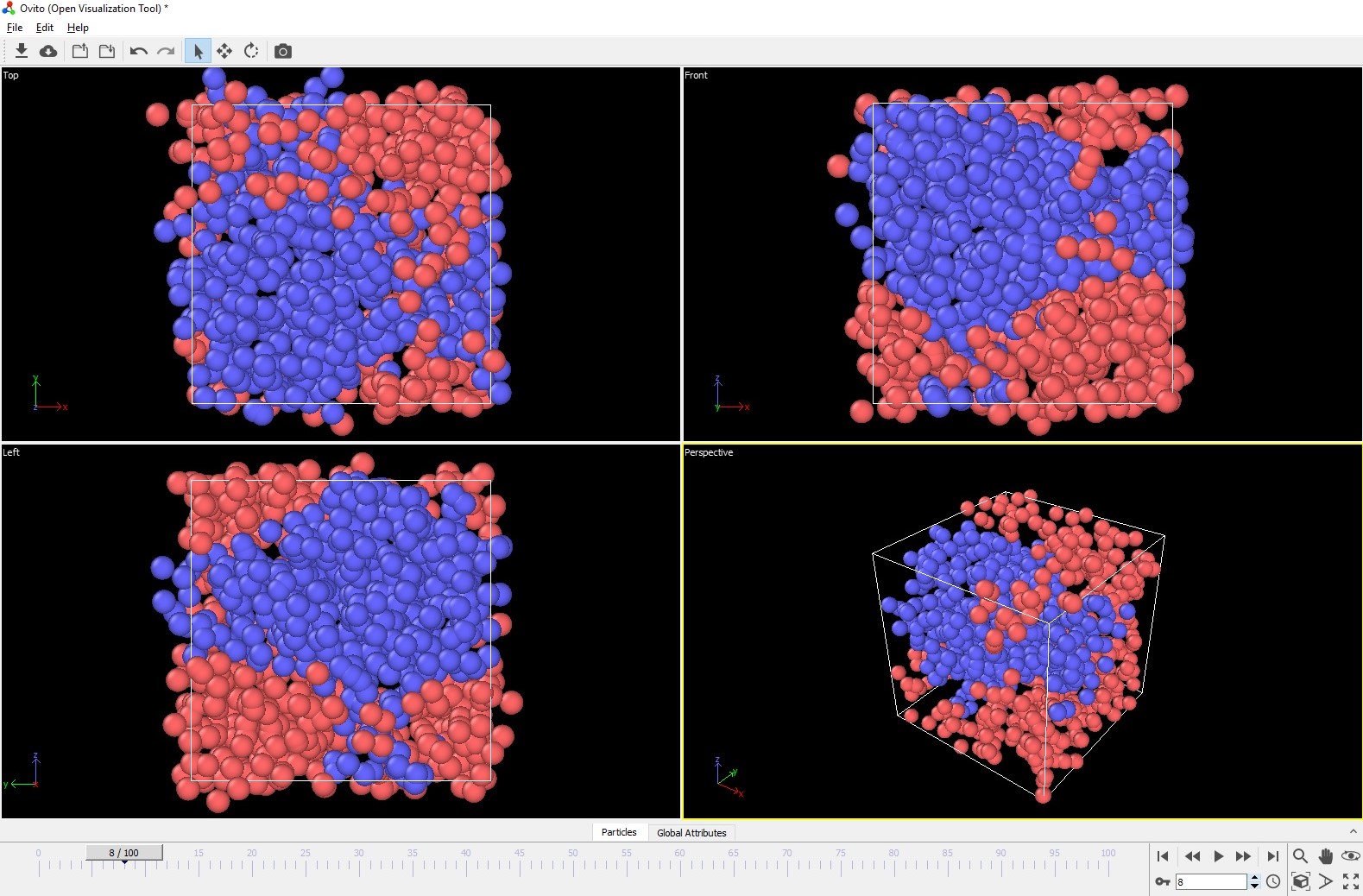

When visualizing a kspace-based simulation from a dump file, I occasionally see particles that lie outside the simulation box. Does anyone know what happens to those particles? LAMMPS doesn’t report them as being out of the box (no error is raised), and I can eliminate this visual “error” by explicitly telling LAMMPS to rebuild its neighbor list every step (neigh_modify every 1 delay 0 check no). However, rebuilding the neighbor list on every step is fairly expensive.

Do the densities of the particles that are visually “out of the box” get assigned to the grid points on the other side of the box?

Best,

Christian

From https://lammps.sandia.gov/doc/dump.html

“Because periodic boundary conditions are enforced only on timesteps when neighbor lists are rebuilt, the coordinates of an atom written to a dump file may be slightly outside the simulation box. Re-neighbor timesteps will not typically coincide with the timesteps dump snapshots are written. See the dump_modify pbc command if you wish to force coordinates to be strictly inside the simulation box.”
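For a dump file that has already been written, the same remapping can be applied in post-processing. The following is a minimal sketch, assuming an orthogonal box that is periodic in all three directions; the function name `wrap_into_box` and the example box bounds are made up for illustration and are not part of LAMMPS.

```python
def wrap_into_box(coords, box_lo, box_hi):
    """Remap one atom's coordinates into [lo, hi) along each periodic axis."""
    wrapped = []
    for x, lo, hi in zip(coords, box_lo, box_hi):
        length = hi - lo
        # Python's % returns a non-negative result for a positive divisor,
        # so atoms that left through the lower face are handled as well.
        wrapped.append(lo + (x - lo) % length)
    return wrapped


# Hypothetical atom that drifted out of a 10 x 10 x 10 box in x and z.
atom = [10.25, 5.0, -0.5]
wrapped_atom = wrap_into_box(atom, [0.0, 0.0, 0.0], [10.0, 10.0, 10.0])
print(wrapped_atom)
```

This only changes what the visualization shows; it has no bearing on how the running simulation treats the atom.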

enforcing dump outputs to keep coordinates within box boundaries does not answer the question.

the answer is in the code, especially in the functions PPPM::particle_map() and PPPM::make_rho(). it also helps to understand how LAMMPS does domain decomposition and how PPPM works by spreading out the charge density over several grid points near the location of the charge (see the meaning of the “order” parameter of PPPM). due to the domain decomposition, the periodic boundaries are not the relevant boundaries; the subdomain boundaries are what matters. reading the source should clarify that atoms not being exactly within subdomain boundaries is taken into account. if they are too far away (or their positions become NaN), you get the (dreaded) “out of range atoms - cannot compute PPPM” error. the per-subdomain distributed “bricks” of density will have to be merged and remapped into the FFT compatible “pencils” before the 3d-FFT can be computed.
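The range check described above can be sketched in one dimension. This is a simplified Python illustration loosely modeled on the logic of PPPM::particle_map(), not the LAMMPS source itself; the grid spacing, the ghost extent (`lo_out`, `hi_out`), and the helper names are assumptions chosen for the example.

```python
OFFSET = 16384  # large shift so that int() truncation behaves like floor()


def stencil_bounds(order):
    """Lowest and highest stencil offsets relative to the base grid index."""
    nlower = -((order - 1) // 2)
    nupper = order // 2
    return nlower, nupper


def map_particle(x, box_lo, dx, order):
    """Base grid index for position x (nearest point for odd order)."""
    shift = OFFSET + 0.5 if order % 2 else float(OFFSET)
    return int((x - box_lo) / dx + shift) - OFFSET


def stencil_range(x, box_lo, dx, order, lo_out, hi_out):
    """First and last grid index touched by the atom's charge stencil.

    lo_out and hi_out are the inclusive limits of the local brick
    including its ghost points (hypothetical values in this sketch).
    """
    n = map_particle(x, box_lo, dx, order)
    nlower, nupper = stencil_bounds(order)
    first, last = n + nlower, n + nupper
    if first < lo_out or last > hi_out:
        raise RuntimeError("out of range atom: stencil leaves the local brick")
    return first, last


# Subdomain owns grid points 0..4; ghost points extend the brick to -3..7.
# An atom slightly below the lower boundary still fits into the brick.
print(stencil_range(-0.3, 0.0, 1.0, 5, -3, 7))

# An atom two grid spacings outside does not fit any more.
try:
    stencil_range(-2.0, 0.0, 1.0, 5, -3, 7)
    too_far_raises = False
except RuntimeError:
    too_far_raises = True
print(too_far_raises)
```

The point of the sketch is that the test is made against the ghost-extended brick of the subdomain, so a small excursion beyond the subdomain (or the periodic box) is tolerated, while a large one triggers the error.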

axel.

So if a particle is outside of the simulation box, it is mapped to grid points that include ghost points for that processor (points whose indices are either A) at least nlo_out and less than nlo_in, or B) greater than nhi_in and at most nhi_out). Then, during reverse_comm, the values on those ghost points are sent to the processor that owns those points, and the contributions are added to the owning processor’s copy via the reverse routines. This way, even if the particle is outside of the box in a dump visualization, its effect is still properly contributed to the part of the box that it would reside in if the pbc had been rigidly enforced.
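A toy one-dimensional version of this picture is sketched below, assuming linear (two-point) charge assignment, a grid spacing of 1, and two hypothetical "processors" that each own half of an 8-point periodic grid. The names `deposit` and `fold_ghosts` are invented for the example, and the folding step simply sums onto a global array instead of exchanging messages as the real reverse communication would.

```python
import math

N_GLOBAL = 8  # global grid points with spacing 1.0, so the box is [0, 8)
GHOST = 2     # hypothetical ghost-layer width on each side of a brick


def deposit(x, q, lo_in, hi_in):
    """Spread charge q at position x onto a local brick that includes ghosts."""
    lo_out, hi_out = lo_in - GHOST, hi_in + GHOST
    brick = {i: 0.0 for i in range(lo_out, hi_out + 1)}
    n = math.floor(x)
    frac = x - n
    for idx, weight in ((n, 1.0 - frac), (n + 1, frac)):
        if idx not in brick:
            raise RuntimeError("out of range atom")
        brick[idx] += q * weight
    return brick


def fold_ghosts(bricks):
    """Add every brick value to the periodic global grid point that owns it."""
    density = [0.0] * N_GLOBAL
    for brick in bricks:
        for idx, value in brick.items():
            density[idx % N_GLOBAL] += value
    return density


# Case 1: the atom drifted out through the lower face and is still
# held by "processor 0", which owns grid points 0..3.
drifted = fold_ghosts([deposit(-0.25, 1.0, 0, 3)])

# Case 2: the same atom after wrapping, now held by "processor 1",
# which owns grid points 4..7.
wrapped = fold_ghosts([deposit(7.75, 1.0, 4, 7)])

print(drifted)
print(wrapped)
print(drifted == wrapped)
```

In both cases the charge ends up on global grid points 7 and 0 with the same weights, which is the equivalence described in the paragraph above.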

“the per-subdomain distributed “bricks” of density will have to be merged and remapped into the FFT compatible “pencils” before the 3d-FFT can be computed”

These two steps are the reverse_comm and brick2fft routines, respectively, correct?

So if the above is true, does one need to worry about how frequently the neighbor lists are rebuilt? How large an effect would rebuilding the neighbor list every step vs. every 100 steps have on the system?

Christian