Good day, dear NOMAD-Team,

I am setting up a NOMAD Oasis right now and have encountered the following problem:

At the beginning, I was following this guide:

When I try to bring the system up with `docker-compose up -d`, I get the following error:

```
ERROR: for proxy Container "d24a1dcf7ce5" is unhealthy.
ERROR: Encountered errors while bringing up the project.
```

The machine is inside the university network and uses a proxy, so I assumed that might be causing the problem. Running the Docker containers on my private machine was successful. After switching to the university VPN and setting the university proxy, I get the same error message, which supports my assumption.

However, using the following Docker containers did not lead to an error:

Do you have any idea what might be causing the problem and how to resolve it?
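One way a proxy could interfere (an assumption, not something confirmed in this thread): if the proxy is configured for Docker itself, for example in the `proxies` section of the Docker client's `~/.docker/config.json`, Docker sets `HTTP_PROXY`/`HTTPS_PROXY`/`NO_PROXY` inside the containers, and requests between the services may then be sent to the university proxy unless their names are exempted in `NO_PROXY`. The minimal sketch below, meant to be run inside a container, prints the proxy variables and the compose service names that a simplified `NO_PROXY` match would not exempt. The matching rule is a simplification of what real HTTP clients do.

```python
import os

# Service hostnames from the docker-compose.yaml in this thread, plus
# "localhost", which the healthchecks use.
SERVICE_HOSTS = ["rabbitmq", "elastic", "mongo", "app", "north", "localhost"]


def proxy_settings(env=None):
    """Collect proxy variables, accepting both lower- and upper-case names."""
    env = os.environ if env is None else env
    settings = {}
    for name in ("http_proxy", "https_proxy", "no_proxy"):
        value = env.get(name) or env.get(name.upper())
        if value:
            settings[name] = value
    return settings


def hosts_not_bypassed(hosts, no_proxy):
    """Return the hosts a simplified NO_PROXY match would still send via the proxy.

    Simplification (assumption): an entry matches if it equals the host or is
    a domain suffix of it ("uni.de" or ".uni.de"); "*" matches everything.
    Real clients differ in details such as CIDR ranges and ports.
    """
    entries = [e.strip().lstrip(".") for e in no_proxy.split(",") if e.strip()]
    if "*" in entries:
        return []
    return [
        host
        for host in hosts
        if not any(host == e or host.endswith("." + e) for e in entries)
    ]


if __name__ == "__main__":
    settings = proxy_settings()
    print("proxy settings:", settings or "none")
    if "http_proxy" in settings or "https_proxy" in settings:
        missing = hosts_not_bypassed(SERVICE_HOSTS, settings.get("no_proxy", ""))
        print("not covered by NO_PROXY:", missing or "none")
```

It could be run with, for example, `docker exec -i nomad_oasis_app python - < check_proxy.py` (the file name is hypothetical). An empty result, or no proxy variables at all, would make this explanation unlikely.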

Hello, thanks for trying NOMAD Oasis.

Can you explain what you mean by proxy? Did you change the path prefix from nomad-oasis to something else? Is your goal to connect to the university server through the VPN or via a public proxy?

Could you also provide the logs of the app and proxy containers (`docker logs nomad_oasis_proxy`, `docker logs nomad_oasis_app`) and your modified nginx.conf, nomad.yaml, and docker-compose.yaml?

Hello,

Since I didn't know what caused the problem, I downloaded the minimal example and made no changes to those files. I start the containers locally on my machine. Switching on the research group VPN blocks the creation of nomad_oasis_proxy. I attached the full docker-compose logs for both cases, with the VPN off and on. Since access to the Oasis will have to be restricted to this particular VPN in the future, and the Oasis will run on a server with similar restrictions, it is important to find out why the VPN blocks the creation of this container. I greatly appreciate your help.

docker_compose_vnp_off.log (215.7 KB)

docker_compose_vnp_on.log (208.9 KB)

Log from another machine. Unfortunately, the proxy container does not exist there.

```
$ docker logs nomad_oasis_app
ERROR nomad.metainfo 2022-10-31T10:10:03 Fail to generate metainfo.
  - nomad.commit: f476ce92
  - nomad.deployment: oasis
  - nomad.metainfo.exe_info: Unit NX_INT is not supported for delay_difference.
  - nomad.metainfo.target_name: NXxpcs
  - nomad.service: unknown nomad service
  - nomad.version: 1.1.5
```

Folder structure:

```
nomad-oasis/
├── docker-compose.yaml
└── configs/
    ├── nginx.conf
    └── nomad.yaml
```

nomad.yaml:

```yaml
services:
  api_host: 'localhost'
  api_base_path: '/nomad-oasis'

oasis:
  is_oasis: true
  uses_central_user_management: true

north:
  jupyterhub_crypt_key: '57cb1dc9d829782656da3d5bd87dfe620bff65fec00ed660531ffca067521a68'

meta:
  deployment: 'oasis'
  deployment_id: 'my_oasis'
  maintainer_email: '[email protected]'

mongo:
  db_name: nomad_oasis_v1

elastic:
  entries_index: nomad_oasis_entries_v1
  materials_index: nomad_oasis_materials_v1
```

nginx.conf:

```nginx
map $http_upgrade $connection_upgrade {
    default upgrade;
    ''      close;
}

server {
    listen       80;
    server_name  localhost;
    proxy_set_header Host $host;

    location / {
        proxy_pass http://app:8000;
    }

    location ~ /nomad-oasis\/?(gui)?$ {
        rewrite ^ /nomad-oasis/gui/ permanent;
    }

    location /nomad-oasis/gui/ {
        proxy_intercept_errors on;
        error_page 404 = @redirect_to_index;
        proxy_pass http://app:8000;
    }

    location @redirect_to_index {
        rewrite ^ /nomad-oasis/gui/index.html break;
        proxy_pass http://app:8000;
    }

    location ~ \/gui\/(service-worker\.js|meta\.json)$ {
        add_header Last-Modified $date_gmt;
        add_header Cache-Control 'no-store, no-cache, must-revalidate, proxy-revalidate, max-age=0';
        if_modified_since off;
        expires off;
        etag off;
        proxy_pass http://app:8000;
    }

    location ~ /api/v1/uploads(/?$|.*/raw|.*/bundle?$) {
        client_max_body_size 35g;
        proxy_request_buffering off;
        proxy_pass http://app:8000;
    }

    location ~ /api/v1/.*/download {
        proxy_buffering off;
        proxy_pass http://app:8000;
    }

    location /nomad-oasis/north/ {
        proxy_pass http://north:9000;

        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # websocket headers
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;
        proxy_set_header X-Scheme $scheme;

        proxy_buffering off;
    }
}
```

docker-compose.yaml:

```yaml
version: "3"

services:
  # broker for celery
  rabbitmq:
    restart: unless-stopped
    image: rabbitmq:3.9.13
    container_name: nomad_oasis_rabbitmq
    environment:
      - RABBITMQ_ERLANG_COOKIE=SWQOKODSQALRPCLNMEQG
      - RABBITMQ_DEFAULT_USER=rabbitmq
      - RABBITMQ_DEFAULT_PASS=rabbitmq
      - RABBITMQ_DEFAULT_VHOST=/
    volumes:
      - rabbitmq:/var/lib/rabbitmq
    healthcheck:
      test: ["CMD", "rabbitmq-diagnostics", "--silent", "--quiet", "ping"]
      interval: 10s
      timeout: 10s
      retries: 30
      start_period: 10s

  # the search engine
  elastic:
    restart: unless-stopped
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.1
    container_name: nomad_oasis_elastic
    environment:
      - ES_JAVA_OPTS=-Xms512m -Xmx512m
      - discovery.type=single-node
    volumes:
      - elastic:/usr/share/elasticsearch/data
    healthcheck:
      test:
        - "CMD"
        - "curl"
        - "--fail"
        - "--silent"
        - "http://elastic:9200/_cat/health"
      interval: 10s
      timeout: 10s
      retries: 30
      start_period: 60s

  # the user data db
  mongo:
    restart: unless-stopped
    image: mongo:5.0.6
    container_name: nomad_oasis_mongo
    environment:
      - MONGO_DATA_DIR=/data/db
      - MONGO_LOG_DIR=/dev/null
    volumes:
      - mongo:/data/db
      - ./.volumes/mongo:/backup
    command: mongod --logpath=/dev/null # --quiet
    healthcheck:
      test:
        - "CMD"
        - "mongo"
        - "mongo:27017/test"
        - "--quiet"
        - "--eval"
        - "'db.runCommand({ping:1}).ok'"
      interval: 10s
      timeout: 10s
      retries: 30
      start_period: 10s

  # nomad worker (processing)
  worker:
    restart: unless-stopped
    image: gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest
    container_name: nomad_oasis_worker
    environment:
      NOMAD_SERVICE: nomad_oasis_worker
      NOMAD_RABBITMQ_HOST: rabbitmq
      NOMAD_ELASTIC_HOST: elastic
      NOMAD_MONGO_HOST: mongo
    depends_on:
      rabbitmq:
        condition: service_healthy
      elastic:
        condition: service_healthy
      mongo:
        condition: service_healthy
    volumes:
      - ./configs/nomad.yaml:/app/nomad.yaml
      - ./.volumes/fs:/app/.volumes/fs
    command: python -m celery -A nomad.processing worker -l info -Q celery

  # nomad app (api + proxy)
  app:
    restart: unless-stopped
    image: gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest
    container_name: nomad_oasis_app
    environment:
      NOMAD_SERVICE: nomad_oasis_app
      NOMAD_SERVICES_API_PORT: 80
      NOMAD_FS_EXTERNAL_WORKING_DIRECTORY: "$PWD"
      NOMAD_RABBITMQ_HOST: rabbitmq
      NOMAD_ELASTIC_HOST: elastic
      NOMAD_MONGO_HOST: mongo
    depends_on:
      rabbitmq:
        condition: service_healthy
      elastic:
        condition: service_healthy
      mongo:
        condition: service_healthy
    volumes:
      - ./configs/nomad.yaml:/app/nomad.yaml
      - ./.volumes/fs:/app/.volumes/fs
    command: ./run.sh
    healthcheck:
      test:
        - "CMD"
        - "curl"
        - "--fail"
        - "--silent"
        - "http://localhost:8000/-/health"
      interval: 10s
      timeout: 10s
      retries: 30
      start_period: 10s

  # nomad remote tools hub (JupyterHUB, e.g. for AI Toolkit)
  north:
    restart: unless-stopped
    image: gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest
    container_name: nomad_oasis_north
    environment:
      NOMAD_SERVICE: nomad_oasis_north
      NOMAD_NORTH_DOCKER_NETWORK: nomad_oasis_network
      NOMAD_NORTH_HUB_CONNECT_IP: north
      NOMAD_NORTH_HUB_IP: "0.0.0.0"
      NOMAD_NORTH_HUB_HOST: north
      NOMAD_SERVICES_API_HOST: app
      NOMAD_FS_EXTERNAL_WORKING_DIRECTORY: "$PWD"
      NOMAD_RABBITMQ_HOST: rabbitmq
      NOMAD_ELASTIC_HOST: elastic
      NOMAD_MONGO_HOST: mongo
    depends_on:
      app:
        condition: service_started
    volumes:
      - ./configs/nomad.yaml:/app/nomad.yaml
      - ./.volumes/fs:/app/.volumes/fs
      - /var/run/docker.sock:/var/run/docker.sock
    user: '1000:991'
    command: python -m nomad.cli admin run hub
    healthcheck:
      test:
        - "CMD"
        - "curl"
        - "--fail"
        - "--silent"
        - "http://localhost:8081/nomad-oasis/north/hub/health"
      interval: 10s
      timeout: 10s
      retries: 30
      start_period: 10s

  # nomad proxy (a reverse proxy for nomad)
  proxy:
    restart: unless-stopped
    image: nginx:1.13.9-alpine
    container_name: nomad_oasis_proxy
    command: nginx -g 'daemon off;'
    volumes:
      - ./configs/nginx.conf:/etc/nginx/conf.d/default.conf
    depends_on:
      app:
        condition: service_healthy
      worker:
        condition: service_started # TODO: service_healthy
      north:
        condition: service_healthy
    ports:
      - 80:80

volumes:
  mongo:
    name: "nomad_oasis_mongo"
  elastic:
    name: "nomad_oasis_elastic"
  rabbitmq:
    name: "nomad_oasis_rabbitmq"
  keycloak:
    name: "nomad_oasis_keycloak"

networks:
  default:
    name: nomad_oasis_network
```

I tried going back to an older version of NOMAD Oasis, and there I ran into another issue: the containers keep restarting.

```
$ docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
640d9d4026ef   nginx:1.13.9-alpine   "nginx -g 'daemon of…"   About a minute ago   Up 4 seconds   0.0.0.0:80->80/tcp, :::80->80/tcp   nomad_oasis_gui
50831d1c0e2c   gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest   "./run.sh"   About a minute ago   Restarting (1) 24 seconds ago      nomad_oasis_app
154ca3d64272   gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest   "python -m celery wo…"   About a minute ago   Restarting (1) 14 seconds ago      nomad_oasis_worker
a0949426cd1c   docker.elastic.co/elasticsearch/elasticsearch:7.17.1   "/bin/tini -- /usr/l…"   About a minute ago   Up About a minute   9200/tcp, 9300/tcp   nomad_oasis_elastic
51d31bc96c36   mongo:4   "docker-entrypoint.s…"   About a minute ago   Up About a minute   27017/tcp   nomad_oasis_mongo
4c225205df6f   rabbitmq:3.7.17   "docker-entrypoint.s…"   About a minute ago   Up About a minute   4369/tcp, 5671-5672/tcp, 25672/tcp   nomad_oasis_rabbitmq
```

nomad_oasis_app.log (42.4 KB)

nomad_oasis_gui.log (2.2 KB)

nomad_oasis_worker.log (39.9 KB)

From the logs, I can see that the NOMAD services (app, worker) cannot reach the infrastructure services (e.g. Elasticsearch or RabbitMQ). I would assume that something goes wrong in the Docker networking. I see similar problems both with and without VPN. I cannot see any issues with the configuration, so I have to assume that something is wrong with your Docker/networking setup.
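To test this diagnosis directly, one could check from inside the app container whether the infrastructure services accept TCP connections at all. A minimal sketch: the hostnames are the compose service names, 9200 and 27017 are the ports used in the compose healthchecks, and 5672 is RabbitMQ's default AMQP port, which is an assumption about what NOMAD connects to.

```python
import socket

# Service names from docker-compose.yaml; 5672 (RabbitMQ AMQP default) is assumed.
SERVICES = {"rabbitmq": 5672, "elastic": 9200, "mongo": 27017}


def can_connect(host, port, timeout=3.0):
    """Return (True, None) if a TCP connection succeeds, else (False, reason)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, None
    except OSError as err:  # DNS failures, refused and timed-out connections
        return False, str(err)


if __name__ == "__main__":
    for host, port in SERVICES.items():
        ok, reason = can_connect(host, port)
        print(f"{host}:{port} ->", "reachable" if ok else f"FAILED ({reason})")
```

For example, `docker exec -i nomad_oasis_app python - < check_services.py` (hypothetical file name). A name-resolution error points at Docker's internal DNS, while a timeout is more consistent with routing problems such as a subnet clash.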

This is really hard to troubleshoot remotely. One problem that other users reported in the past was networking services (e.g. VPN) using IPs from the same range that Docker uses to assign IPs to containers. You can use a different IP range by adding more configuration to your docker-compose.yaml:

```yaml
networks:
  default:
    name: nomad_oasis_network
    ipam:
      driver: default
      config:
        - subnet: "192.168.0.0/24"
```

Popular choices for IP ranges include:

- 192.168.0.0/16

- 172.16.0.0/16

- 10.0.0.0/24
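To choose among these ranges, one can compare each candidate with the routes the VPN installs on the host. A minimal sketch using Python's standard `ipaddress` module; the VPN routes in it are made up for illustration and would need to be replaced by the host's real routes (for example from `ip route` on Linux while the VPN is connected). The subnet Docker currently assigns can be seen with `docker network inspect nomad_oasis_network`.

```python
import ipaddress

# Candidate subnets for the Docker network, taken from the suggestions above.
CANDIDATES = ["192.168.0.0/24", "192.168.0.0/16", "172.16.0.0/16", "10.0.0.0/24"]


def overlaps(candidates, host_routes):
    """Map each candidate subnet to the host routes it collides with."""
    # strict=False accepts routes written with host bits set, e.g. 10.8.0.5/24
    routes = [ipaddress.ip_network(r, strict=False) for r in host_routes]
    result = {}
    for cand in candidates:
        net = ipaddress.ip_network(cand)
        result[cand] = [
            str(r) for r in routes if net.version == r.version and net.overlaps(r)
        ]
    return result


if __name__ == "__main__":
    # Hypothetical VPN routes; replace with the real ones from the host.
    vpn_routes = ["10.0.0.0/8", "192.168.100.0/24"]
    for cand, hits in overlaps(CANDIDATES, vpn_routes).items():
        print(cand, "-> conflicts with " + ", ".join(hits) if hits else "-> looks free")
```

With these made-up routes, 192.168.0.0/16 and 10.0.0.0/24 would collide with the VPN, while 192.168.0.0/24 and 172.16.0.0/16 would not.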

With the older version, the config simply does not match the software. If you keep experimenting, you should use the version and config that you were using when you posted this:

> Since I didn't know what caused the problem, I downloaded the minimal example and made no changes to those files. I start the containers locally on my machine. Switching on the research group VPN blocks the creation of nomad_oasis_proxy. I attached the full docker-compose logs for both cases, with the VPN off and on. Since access to the Oasis will have to be restricted to this particular VPN in the future, and the Oasis will run on a server with similar restrictions, it is important to find out why the VPN blocks the creation of this container. I greatly appreciate your help.

I tried the suggested change, but it does not seem to make much difference.

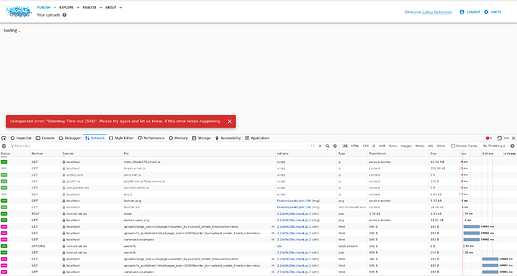

However, nomad_oasis_app shows some interesting behavior. After being unhealthy for around 14 minutes, it becomes healthy for some reason, which makes it possible to open the GUI and interact with parts of it. The upload section, however, returns a 504 error.

```
$ docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
45ec6e8c341d   nginx:1.13.9-alpine   "nginx -g 'daemon of…"   43 minutes ago   Up 37 minutes   0.0.0.0:80->80/tcp, :::80->80/tcp   nomad_oasis_proxy
227560fedbb8   gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest   "python -m nomad.cli…"   43 minutes ago   Up 42 minutes (healthy)   8000/tcp, 9000/tcp   nomad_oasis_north
5c7f2c180fbd   gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest   "./run.sh"   43 minutes ago   Up 43 minutes (healthy)   8000/tcp, 9000/tcp   nomad_oasis_app
e18aa8661deb   gitlab-registry.mpcdf.mpg.de/nomad-lab/nomad-fair:latest   "python -m celery -A…"   43 minutes ago   Up 43 minutes   8000/tcp, 9000/tcp   nomad_oasis_worker
c6d69186e27e   rabbitmq:3.9.13   "docker-entrypoint.s…"   43 minutes ago   Up 43 minutes (healthy)   4369/tcp, 5671-5672/tcp, 15691-15692/tcp, 25672/tcp   nomad_oasis_rabbitmq
111c3de4e202   mongo:5.0.6   "docker-entrypoint.s…"   43 minutes ago   Up 43 minutes (healthy)   27017/tcp   nomad_oasis_mongo
85bf9867fd5e   docker.elastic.co/elasticsearch/elasticsearch:7.17.1   "/bin/tini -- /usr/l…"   43 minutes ago   Up 43 minutes (healthy)   9200/tcp, 9300/
```

docker-compose_ip_192.168.0.0.log (106.9 KB)
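For comparison with the roughly 14 minutes observed here, a rough estimate of how long Docker keeps probing before it reports a container as unhealthy, using the `app` healthcheck values from the docker-compose.yaml above. The timing model is a simplification (an assumption, not Docker's exact scheduler): failures during `start_period` are not counted, after that `retries` consecutive failures are needed, and the next probe starts `interval` after the previous one finishes, so a probe that hangs until `timeout` lengthens each cycle.

```python
def seconds_until_unhealthy(start_period, interval, timeout, retries, probes_hang=False):
    """Rough number of seconds until Docker marks a container 'unhealthy'.

    Assumes every probe fails. If probes_hang is True, each probe is assumed
    to run into its timeout before failing, which adds `timeout` per cycle.
    """
    cycle = interval + (timeout if probes_hang else 0)
    return start_period + retries * cycle


# Healthcheck values of the `app` service (all in seconds).
APP = dict(start_period=10, interval=10, timeout=10, retries=30)

if __name__ == "__main__":
    fast = seconds_until_unhealthy(**APP)
    slow = seconds_until_unhealthy(**APP, probes_hang=True)
    print(f"app: ~{fast / 60:.1f} min (fast failures) to ~{slow / 60:.1f} min (timeouts)")
```

Under this model the app is reported unhealthy after roughly 5 to 10 minutes, before the ~14 minutes it needed here. Because the proxy uses `condition: service_healthy`, that would be consistent with docker-compose aborting with "Container ... is unhealthy" (presumably the app or north container) while Docker keeps probing and later flips the status back to healthy.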

It still looks like a network problem. The rest looks fine to me. Could you provide the output of `docker logs nomad_oasis_app` in this new setting? The nomad_oasis_app is supposed to answer the requests that time out here.

There still seem to be network problems. The nomad app cannot reach our user-management servers at https://nomad-lab.eu.
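To narrow this down, one could compare a request to https://nomad-lab.eu from inside the app container once with the proxy variables from the environment and once with a forced direct connection. A minimal sketch using only the standard library's `urllib`; the URL is the one mentioned above, and the comparison itself is a suggested diagnostic, not a NOMAD tool.

```python
import sys
import urllib.error
import urllib.request

DEFAULT_URL = "https://nomad-lab.eu"  # user-management host mentioned above


def probe(url, timeout=10.0, use_env_proxies=True):
    """Try a GET request and return (got_an_http_answer, detail).

    With use_env_proxies=True, urllib's default ProxyHandler honours the
    HTTP(S)_PROXY / NO_PROXY variables; with False, an empty ProxyHandler
    forces a direct connection.
    """
    handlers = [] if use_env_proxies else [urllib.request.ProxyHandler({})]
    opener = urllib.request.build_opener(*handlers)
    try:
        with opener.open(url, timeout=timeout) as resp:
            return True, f"HTTP {resp.status}"
    except urllib.error.HTTPError as err:
        # Something (the server or a proxy) answered, just not with 2xx.
        return True, f"HTTP {err.code}"
    except (urllib.error.URLError, OSError) as err:
        return False, str(getattr(err, "reason", err))


if __name__ == "__main__":
    url = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_URL
    for use_proxy in (True, False):
        ok, detail = probe(url, use_env_proxies=use_proxy)
        label = "via env proxies" if use_proxy else "direct"
        print(f"{url} ({label}): {'answered' if ok else 'FAILED'} - {detail}")
```

If only the direct attempt fails, outgoing traffic has to go through the university proxy, and the containers would need to be given the proxy settings; if both fail, the problem is more likely in the Docker network itself.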