Hi Anubhav, thank you so much for your response. The POTCAR warnings show up every time I generate VASP files from pymatgen, and I tend to ignore them (I’ve seen them appear for completely successful VASP calculations). That said, I checked the VASP files, and POTCAR.gz seems to have been generated normally. The INCAR, KPOINTS, and POSCAR files look good too. I believe VASP is not running at all. From what I can gather, the calculation fails because no vasp executable is found, and consequently no OUTCAR file is generated.

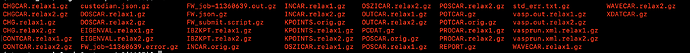

The VASP job is a structure relaxation of Si, which I am running as part of an atomate set-up tutorial (linked in my initial post) to get atomate running properly on the HPC I use (TACC Stampede2). The VASP files are entirely generated using atomate and are sourced from Pymatgen. I can send you the contents of the POTCAR, POSCAR, INCAR, or KPOINTS files if you believe it would be helpful, but I believe the issue is not related to them. The following files are generated in the launch directory:

The contents of these files, excluding the VASP input files, are as follows:

vasp.out.gz:

‘’’

TACC: Starting up job 11354959

TACC: Starting parallel tasks…

Usage: ./mpiexec [global opts] [exec1 local opts] : [exec2 local opts] : ...

Global options (passed to all executables):

Global environment options:

-genv {name} {value} environment variable name and value

-genvlist {env1,env2,...} environment variable list to pass

-genvnone do not pass any environment variables

-genvall pass all environment variables not managed

by the launcher (default)

Other global options:

-f {name} | -hostfile {name} file containing the host names

-hosts {host list} comma separated host list

-configfile {name} config file containing MPMD launch options

-machine {name} | -machinefile {name}

file mapping procs to machines

-pmi-connect {nocache|lazy-cache|cache}

set the PMI connections mode to use

-pmi-aggregate aggregate PMI messages

-pmi-noaggregate do not aggregate PMI messages

-trace {<libraryname>} trace the application using <libraryname>

profiling library; default is libVT.so

-trace-imbalance {<libraryname>} trace the application using <libraryname>

imbalance profiling library; default is libVTim.so

-check-mpi {<libraryname>} check the application using <libraryname>

checking library; default is libVTmc.so

-ilp64 Preload ilp64 wrapper library for support default size of integer 8 bytes

-mps start statistics gathering for MPI Performance Snapshot (MPS)

-trace-pt2pt collect information about

Point to Point operations

-trace-collectives collect information about

Collective operations

-tune [<confname>] apply the tuned data produced by

the MPI Tuner utility

-use-app-topology <statfile> perform optimized rank placement based statistics

and cluster topology

-noconf do not use any mpiexec's configuration files

-branch-count {leaves_num} set the number of children in tree

-gwdir {dirname} working directory to use

-gpath {dirname} path to executable to use

-gumask {umask} mask to perform umask

-tmpdir {tmpdir} temporary directory for cleanup input file

-cleanup create input file for clean up

-gtool {options} apply a tool over the mpi application

-gtoolfile {file} apply a tool over the mpi application. Parameters specified in the file

Local options (passed to individual executables):

Local environment options:

-env {name} {value} environment variable name and value

-envlist {env1,env2,...} environment variable list to pass

-envnone do not pass any environment variables

-envall pass all environment variables (default)

Other local options:

-host {hostname} host on which processes are to be run

-hostos {OS name} operating system on particular host

-wdir {dirname} working directory to use

-path {dirname} path to executable to use

-umask {umask} mask to perform umask

-n/-np {value} number of processes

{exec_name} {args} executable name and arguments

Hydra specific options (treated as global):

Bootstrap options:

-bootstrap bootstrap server to use

(ssh rsh pdsh fork slurm srun ll llspawn.stdio lsf blaunch sge qrsh persist service pbsdsh)

-bootstrap-exec executable to use to bootstrap processes

-bootstrap-exec-args additional options to pass to bootstrap server

-prefork use pre-fork processes startup method

-enable-x/-disable-x enable or disable X forwarding

Resource management kernel options:

-rmk resource management kernel to use (user slurm srun ll llspawn.stdio lsf blaunch sge qrsh pbs cobalt)

Processor topology options:

-binding process-to-core binding mode

Extended fabric control options:

-rdma select RDMA-capable network fabric (dapl). Fallback list is ofa,tcp,tmi,ofi

-RDMA select RDMA-capable network fabric (dapl). Fallback is ofa

-dapl select DAPL-capable network fabric. Fallback list is tcp,tmi,ofa,ofi

-DAPL select DAPL-capable network fabric. No fallback fabric is used

-ib select OFA-capable network fabric. Fallback list is dapl,tcp,tmi,ofi

-IB select OFA-capable network fabric. No fallback fabric is used

-tmi select TMI-capable network fabric. Fallback list is dapl,tcp,ofa,ofi

-TMI select TMI-capable network fabric. No fallback fabric is used

-mx select Myrinet MX* network fabric. Fallback list is dapl,tcp,ofa,ofi

-MX select Myrinet MX* network fabric. No fallback fabric is used

-psm select PSM-capable network fabric. Fallback list is dapl,tcp,ofa,ofi

-PSM select PSM-capable network fabric. No fallback fabric is used

-psm2 select Intel* Omni-Path Fabric. Fallback list is dapl,tcp,ofa,ofi

-PSM2 select Intel* Omni-Path Fabric. No fallback fabric is used

-ofi select OFI-capable network fabric. Fallback list is tmi,dapl,tcp,ofa

-OFI select OFI-capable network fabric. No fallback fabric is used

Checkpoint/Restart options:

-ckpoint {on|off} enable/disable checkpoints for this run

-ckpoint-interval checkpoint interval

-ckpoint-prefix destination for checkpoint files (stable storage, typically a cluster-wide file system)

-ckpoint-tmp-prefix temporary/fast/local storage to speed up checkpoints

-ckpoint-preserve number of checkpoints to keep (default: 1, i.e. keep only last checkpoint)

-ckpointlib checkpointing library (blcr)

-ckpoint-logfile checkpoint activity/status log file (appended)

-restart restart previously checkpointed application

-ckpoint-num checkpoint number to restart

Demux engine options:

-demux demux engine (poll select)

Debugger support options:

-tv run processes under TotalView

-tva {pid} attach existing mpiexec process to TotalView

-gdb run processes under GDB

-gdba {pid} attach existing mpiexec process to GDB

-gdb-ia run processes under Intel IA specific GDB

Other Hydra options:

-v | -verbose verbose mode

-V | -version show the version

-info build information

-print-rank-map print rank mapping

-print-all-exitcodes print exit codes of all processes

-iface network interface to use

-help show this message

-perhost <n> place consecutive <n> processes on each host

-ppn <n> stand for "process per node"; an alias to -perhost <n>

-grr <n> stand for "group round robin"; an alias to -perhost <n>

-rr involve "round robin" startup scheme

-s <spec> redirect stdin to all or 1,2 or 2-4,6 MPI processes (0 by default)

-ordered-output avoid data output intermingling

-profile turn on internal profiling

-l | -prepend-rank prepend rank to output

-prepend-pattern prepend pattern to output

-outfile-pattern direct stdout to file

-errfile-pattern direct stderr to file

-localhost local hostname for the launching node

-nolocal avoid running the application processes on the node where mpiexec.hydra started

Intel(R) MPI Library for Linux* OS, Version 2017 Update 3 Build 20170405 (id: 17193)

Copyright (C) 2003-2017, Intel Corporation. All rights reserved.

TACC: MPI job exited with code: 255

TACC: Shutdown complete. Exiting.

‘’’

std_err.txt.gz:

‘’’

[mpiexec/c401-021.stampede2.tacc.utexas.edu] set_default_values (…/…/ui/mpich/utils.c:4663): no executable provided

[mpiexecc401-021.stampede2.tacc.utexas.edu] HYD_uii_mpx_get_parameters (../../ui/mpich/utils.c:5151): setting default values failed

‘’’
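Since the error says "no executable provided", one thing worth checking is whether the names in my command actually resolve on the `PATH` seen by the job. Below is a minimal sketch of such a check; `resolve_command` is a helper name I made up, and it assumes the check is run in the same environment (same modules loaded) as the batch job.

```python
import shlex
import shutil


def resolve_command(command: str) -> dict:
    """Map each whitespace-separated token of `command` to its path on PATH (or None)."""
    return {token: shutil.which(token) for token in shlex.split(command)}


if __name__ == "__main__":
    # "ibrun vasp_std" is the launcher and executable named in my FireWorker env.
    for token, path in resolve_command("ibrun vasp_std").items():
        print(f"{token}: {path or 'NOT FOUND on PATH'}")
```

A `NOT FOUND` line for `vasp_std` would suggest that the VASP module is not loaded inside the job, but this only tests name lookup and does not prove the binary runs.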

FW.json.gz:

‘’’

{

“spec”: {

“_tasks”: [

{

“structure”: {

“module”: “pymatgen.core.structure”,

“class”: “Structure”,

“charge”: null,

“lattice”: {

“matrix”: [

[

0.0,

2.734364,

2.734364

],

[

2.734364,

0.0,

2.734364

],

[

2.734364,

2.734364,

0.0

]

],

“a”: 3.8669746532647453,

“b”: 3.8669746532647453,

“c”: 3.8669746532647453,

“alpha”: 59.99999999999999,

“beta”: 59.99999999999999,

“gamma”: 59.99999999999999,

“volume”: 40.88829284866483

},

“sites”: [

{

“species”: [

{

“element”: “Si”,

“occu”: 1

}

],

“abc”: [

0.25,

0.25,

0.25

],

“xyz”: [

1.367182,

1.367182,

1.367182

],

“label”: “Si”,

“properties”: {

“magmom”: 0.0

}

},

{

“species”: [

{

“element”: “Si”,

“occu”: 1

}

],

“abc”: [

0.0,

0.0,

0.0

],

“xyz”: [

0.0,

0.0,

0.0

],

“label”: “Si”,

“properties”: {

“magmom”: 0.0

}

}

]

},

“vasp_input_set”: {

“module”: “pymatgen.io.vasp.sets”,

“class”: “MPRelaxSet”,

“version”: null,

“structure”: {

“module”: “pymatgen.core.structure”,

“class”: “Structure”,

“charge”: null,

“lattice”: {

“matrix”: [

[

0.0,

2.734364,

2.734364

],

[

2.734364,

0.0,

2.734364

],

[

2.734364,

2.734364,

0.0

]

],

“a”: 3.8669746532647453,

“b”: 3.8669746532647453,

“c”: 3.8669746532647453,

“alpha”: 59.99999999999999,

“beta”: 59.99999999999999,

“gamma”: 59.99999999999999,

“volume”: 40.88829284866483

},

“sites”: [

{

“species”: [

{

“element”: “Si”,

“occu”: 1

}

],

“abc”: [

0.25,

0.25,

0.25

],

“xyz”: [

1.367182,

1.367182,

1.367182

],

“label”: “Si”,

“properties”: {

“magmom”: 0.0

}

},

{

“species”: [

{

“element”: “Si”,

“occu”: 1

}

],

“abc”: [

0.0,

0.0,

0.0

],

“xyz”: [

0.0,

0.0,

0.0

],

“label”: “Si”,

“properties”: {

“magmom”: 0.0

}

}

]

},

“force_gamma”: true

},

“_fw_name”: “{{atomate.vasp.firetasks.write_inputs.WriteVaspFromIOSet}}”

},

{

“vasp_cmd”: “>>vasp_cmd<<”,

“job_type”: “double_relaxation_run”,

“max_force_threshold”: 0.25,

“ediffg”: null,

“auto_npar”: “>>auto_npar<<”,

“half_kpts_first_relax”: false,

“_fw_name”: “{{atomate.vasp.firetasks.run_calc.RunVaspCustodian}}”

},

{

“name”: “structure optimization”,

“_fw_name”: “{{atomate.common.firetasks.glue_tasks.PassCalcLocs}}”

},

{

“db_file”: “>>db_file<<”,

“additional_fields”: {

“task_label”: “structure optimization”

},

“_fw_name”: “{{atomate.vasp.firetasks.parse_outputs.VaspToDb}}”

}

]

},

“fw_id”: 107,

“created_on”: “2023-05-19T20:30:32.826035”,

“updated_on”: “2023-05-22T17:00:29.069422”,

“launches”: [

{

“fworker”: {

“name”: “Stampede2_normal”,

“category”: “none”,

“query”: “{}”,

“env”: {

“db_file”: “/home1/09341/jamesgil/atomate/config/db.json”,

“vasp_cmd”: “ibrun vasp_std>my_vasp.out”,

“scratch_dir”: “/scratch/09341/jamesgil”

}

},

“fw_id”: 107,

“launch_dir”: “/scratch/09341/jamesgil/atomate_test/block_2023-05-19-16-58-29-771445/launcher_2023-05-22-16-59-33-230791”,

“host”: “c401-021.stampede2.tacc.utexas.edu”,

“ip”: “206.76.194.9”,

“trackers”: [],

“action”: null,

“state”: “RUNNING”,

“state_history”: [

{

“state”: “RUNNING”,

“created_on”: “2023-05-22T17:00:29.029631”,

“updated_on”: “2023-05-22T17:00:29.029646”

}

],

“launch_id”: 89

}

],

“state”: “RUNNING”,

“name”: “Si-structure optimization”

}

‘’’
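For anyone who wants to pull just the worker settings out of this dump rather than read the whole thing, here is a minimal sketch. It assumes the key layout shown above (`launches` → first entry → `fworker` → `env`); `fworker_env` is a hypothetical helper name, not part of FireWorks.

```python
import gzip
import json


def fworker_env(fw_json_path: str) -> dict:
    """Return the FireWorker env dict of the first launch recorded in FW.json(.gz)."""
    # Open transparently whether or not the file has been gzipped.
    opener = gzip.open if fw_json_path.endswith(".gz") else open
    with opener(fw_json_path, "rt", encoding="utf-8") as handle:
        fw = json.load(handle)
    return fw["launches"][0]["fworker"]["env"]


# Example (run inside the launch directory):
#   print(fworker_env("FW.json.gz")["vasp_cmd"])
```

This is only a convenience for inspecting which `vasp_cmd`, `db_file`, and `scratch_dir` the job actually received.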

custodian.json.gz:

‘’’

[

{

“job”: {

“module”: “custodian.vasp.jobs”,

“class”: “VaspJob”,

“version”: “2023.3.10”,

“vasp_cmd”: [

“ibrun”,

“vasp_std>my_vasp.out”

],

“output_file”: “vasp.out”,

“stderr_file”: “std_err.txt”,

“suffix”: “.relax1”,

“final”: false,

“backup”: true,

“auto_npar”: false,

“auto_gamma”: true,

“settings_override”: null,

“gamma_vasp_cmd”: null,

“copy_magmom”: false,

“auto_continue”: false

},

“corrections”: [],

“handler”: null,

“validator”: {

“module”: “custodian.vasp.validators”,

“class”: “VasprunXMLValidator”,

“version”: “2023.3.10”,

“output_file”: “vasp.out”,

“stderr_file”: “std_err.txt”

},

“max_errors”: false,

“max_errors_per_job”: false,

“max_errors_per_handler”: false,

“nonzero_return_code”: false

}

]

‘’’
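One thing that stands out to me in this dump is that `vasp_cmd` was stored as two tokens, with `>my_vasp.out` still attached to the executable name. The sketch below reproduces that split with Python's `shlex.split`. I have not confirmed that this is how atomate or Custodian tokenizes the string, so treat that as an assumption; `tokens_with_redirection` is a hypothetical helper.

```python
import shlex

# Characters that only have special meaning when a shell interprets the command.
SHELL_OPERATORS = (">", "<", "|")


def tokens_with_redirection(command: str) -> list:
    """Return the tokens of `command` that still contain shell operators."""
    return [
        token
        for token in shlex.split(command)
        if any(op in token for op in SHELL_OPERATORS)
    ]


if __name__ == "__main__":
    vasp_cmd = "ibrun vasp_std>my_vasp.out"
    print(shlex.split(vasp_cmd))              # ['ibrun', 'vasp_std>my_vasp.out']
    print(tokens_with_redirection(vasp_cmd))  # ['vasp_std>my_vasp.out']
```

If the command is launched as an argument list without a shell, the `>` would not be treated as a redirect, so the launcher would receive `vasp_std>my_vasp.out` as a literal argument. Whether that explains the "no executable provided" message I cannot say for sure.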

FW_submit.script.gz:

‘’’

#!/bin/bash -l

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=64

#SBATCH --time=4:00:00

#SBATCH --partition=normal

#SBATCH --account=TG-MAT210016

#SBATCH --job-name=FW_job

#SBATCH --output=FW_job-%j.out

#SBATCH --error=FW_job-%j.error

#SBATCH --mail-type=START,END

#SBATCH --mail-user=jamesgilumich.edu

cd /scratch/09341/jamesgil/atomate_test/block_2023-05-19-16-58-29-771445/launcher_2023-05-22-16-59-33-230791

rlaunch -c /home1/09341/jamesgil/atomate/config singleshot

# CommonAdapter (SLURM) completed writing Template

‘’’

FW_job-###.out.gz:

‘’’

2023-05-22 12:00:04,525 INFO Hostname/IP lookup (this will take a few seconds)

2023-05-22 12:00:04,538 INFO Launching Rocket

2023-05-22 12:00:29,493 INFO RUNNING fw_id: 107 in directory: /scratch/09341/jamesgil/atomate_test/block_2023-05-19-16-58-29-771445/launcher_2023-05-22-16-59-33-230791

2023-05-22 12:00:29,714 INFO Task started: {{atomate.vasp.firetasks.write_inputs.WriteVaspFromIOSet}}.

2023-05-22 12:00:29,956 INFO Task completed: {{atomate.vasp.firetasks.write_inputs.WriteVaspFromIOSet}}

2023-05-22 12:00:30,037 INFO Task started: {{atomate.vasp.firetasks.run_calc.RunVaspCustodian}}.

‘’’

The FW_job-###.error.gz file is identical to the one posted in my previous comment.

The job seems to be failing in atomate.vasp.firetasks.run_calc.RunVaspCustodian. Could this be a version compatibility issue with FireWorks or Custodian? Have you seen this kind of problem before? Thanks again for your help, and please let me know if any other files or information would be helpful.