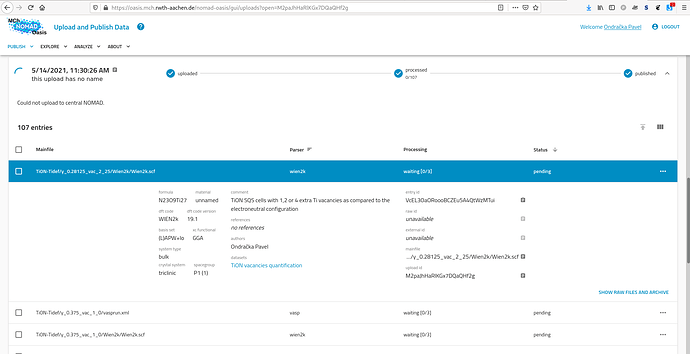

While testing the upload to central NOMAD as part of the bug "Publish upload to central NOMAD" does not work (Issue #17, nomad-coe/nomad on GitHub), I noticed that the uploads page in our Oasis is not working properly. Some older published uploads do not show the “published” globe icon (even though they are published) but just a rotating circle, and the page keeps requesting the upload data from the server. The “upload to central NOMAD” button is also gray/inactive for these uploads:

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/VFiP3BXJTwyVpAVgpjv31w?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 186727 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/OXk6jjQnQSmXwH-ug_DTsQ?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 162653 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/kAXXbFFGQ--8m7u0QsZt0w?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 50518 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

2021/05/26 07:27:47 [warn] 7#7: *12665 an upstream response is buffered to a temporary file /var/cache/nginx/proxy_temp/6/50/0000000506 while reading upstream, client: 134.130.64.226, server: oasis.mch.rwth-aachen.de, request: "GET /nomad-oasis/api/uploads/JbdlUTi8TTavwlUXDlro2A?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1", upstream: "http://172.26.0.6:8000/nomad-oasis/api/uploads/JbdlUTi8TTavwlUXDlro2A?page=1&per_page=10&order_by=tasks_status&order=1", host: "oasis.mch.rwth-aachen.de", referrer: "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/W6pTuvAiSV-cfEd2cuLINA?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 199354 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/H9yxAWF2TamhzKJCJrbbXQ?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 51051 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/JbdlUTi8TTavwlUXDlro2A?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 185859 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:47 +0000] "GET /nomad-oasis/api/uploads/M2paJhHaRlKGx7DQaQHf2g?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 176592 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

134.130.64.226 - - [26/May/2021:07:27:48 +0000] "GET /nomad-oasis/api/uploads/VFiP3BXJTwyVpAVgpjv31w?page=1&per_page=10&order_by=tasks_status&order=1 HTTP/1.1" 200 186727 "https://oasis.mch.rwth-aachen.de/nomad-oasis/gui/uploads" "Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:86.0) Gecko/20100101 Firefox/86.0" "-"

This repeats over and over, with Firefox keeping two cores busy (and the server app container at around 25% CPU utilization). I’m not opening a bug yet, as there might be an issue with the database: I did some non-standard things previously (see “How to start re-processing of undetected entries”), so could this be fallout from that?
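To see which uploads are caught in the polling loop, the access log can be tallied per upload ID. The following is only a rough sketch: the function name `count_upload_requests` is made up for illustration, and the regular expression assumes the `/nomad-oasis/api/uploads/<upload_id>?...` request path seen in the log lines above.

```python
import re
from collections import Counter

# Matches the upload ID in request paths such as
# "GET /nomad-oasis/api/uploads/<upload_id>?page=1&..."
# (path prefix taken from the log excerpt; adjust for other deployments).
UPLOAD_REQUEST = re.compile(r'"GET /nomad-oasis/api/uploads/([A-Za-z0-9_-]+)\?')


def count_upload_requests(lines):
    """Count GET requests per upload ID in nginx access-log lines."""
    counts = Counter()
    for line in lines:
        # Skip nginx error-log entries like the "[warn]" buffering notice:
        # they repeat the request string and would be counted twice.
        if "[warn]" in line:
            continue
        match = UPLOAD_REQUEST.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

Passing the lines of the log file to `count_upload_requests(...)` and calling `.most_common()` on the result lists the most frequently polled uploads first. IDs whose count keeps growing while the page is open are likely the ones stuck with the rotating circle.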

This is with an Oasis running the latest version, v0.10.4.