Hello,

I have been trying to get wf_elastic_constant() working on the supercomputing cluster I use (TACC Stampede2), but I have run into a series of problems, the latest being a VasprunXMLValidator error in the middle of the workflow. I have successfully run and analyzed a band structure workflow and a structure optimization workflow on this cluster, so I am confident that VASP and MongoDB work properly. However, I have run into more serious issues with more complex workflows. To provide some context, the first problem I ran into with the wf_elastic_constant() workflow was an IMPI environment failure:

in the vasp.out.gz:

c401-001.stampede2.tacc.utexas.edu.61754hfp_gen1_context_open: hfi_userinit_internal: failed, trying again (1/3)

c401-001.stampede2.tacc.utexas.edu.61754hfi_userinit_internal: assign_context command failed: Device or resource busy

c401-001.stampede2.tacc.utexas.edu.61754hfp_gen1_context_open: hfi_userinit_internal: failed, trying again (2/3)

c401-001.stampede2.tacc.utexas.edu.61754hfi_userinit_internal: assign_context command failed: Device or resource busy

c401-001.stampede2.tacc.utexas.edu.61754hfp_gen1_context_open: hfi_userinit_internal: failed, trying again (3/3)

TACC: MPI job exited with code: 254

TACC: Shutdown complete. Exiting.

and in the std_err.gz

c401-001.stampede2.tacc.utexas.edu.61737PSM2 can't open hfi unit: -1 (err=23)

c401-001.stampede2.tacc.utexas.edu.61757PSM2 can't open hfi unit: -1 (err=23)

c401-001.stampede2.tacc.utexas.edu.61770PSM2 can't open hfi unit: -1 (err=23)

[12] MPI startup(): tmi fabric is not available and fallback fabric is not enabled

[1] MPI startup(): tmi fabric is not available and fallback fabric is not enabled

[23] MPI startup(): tmi fabric is not available and fallback fabric is not enabled

I am working with a colleague on the same cluster who faced the exact same issue while running the magnetic orderings workflow (he has posted here). Both of us find that this error occurs during the early stages of a complex workflow, and I have seen it in two distinct places:

- During the structure optimization, after custodian corrects INCAR parameters twice: first changing ISMEAR = -5 to ISMEAR = 2, SIGMA = 0.2 for metals through the IncorrectSmearingHandler, followed by a reduction of SIGMA to 0.14 by the LargeSigmaHandler. The structure optimization completes successfully in the first round with ISMEAR = -5, but the VASP calculation is resubmitted by custodian with ISMEAR = 2 and SIGMA = 0.2. In the middle of the second calculation, the optimization stops abruptly (presumably because the entropy term is too large, triggering the LargeSigmaHandler), and a third calculation begins with SIGMA = 0.14. During this calculation, the above error was triggered.

- At the start of the deformation steps. This was during a run where, prior to submitting the workflow, I preemptively set ISMEAR and SIGMA to values better suited for metals so that custodian would not need to intervene during the structure optimization. This time, the structure optimization completed, but the above error was triggered by the deformation steps (of which there are 23).

Given where these failures occur, I believe the error is related to overloading a compute node, either through the number of tasks assigned to it or through its memory being exhausted.
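To pin down which launcher directories actually hit this MPI startup failure, the logs can be scanned for the messages quoted above. The following is only a minimal sketch using the Python standard library: the signature strings are copied from the log excerpts in this post, while the function names and the assumption that the relevant files start with "vasp.out" or "std_err" (gzipped or not) are mine.

```python
import gzip
import os

# Substrings taken from the vasp.out / std_err excerpts quoted in this post.
SIGNATURES = (
    "can't open hfi unit",
    "assign_context command failed",
    "tmi fabric is not available",
)
# Assumption: log files are named vasp.out*, std_err* (plain or .gz).
LOG_PREFIXES = ("vasp.out", "std_err")


def open_maybe_gzip(path):
    """Open a plain or gzip-compressed text file for reading."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt", errors="replace")
    return open(path, "r", errors="replace")


def scan_for_mpi_failures(root):
    """Return {log_path: [matched signatures]} for every log under root."""
    hits = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.startswith(LOG_PREFIXES):
                continue
            path = os.path.join(dirpath, name)
            with open_maybe_gzip(path) as fh:
                text = fh.read()
            matched = [sig for sig in SIGNATURES if sig in text]
            if matched:
                hits[path] = matched
    return hits


if __name__ == "__main__":
    import sys

    for path, sigs in sorted(scan_for_mpi_failures(sys.argv[1]).items()):
        print(path, "->", ", ".join(sigs))
```

Run against the workflow's scratch directory, this would show whether the failure is confined to particular launchers (for example, only those started after a custodian restart).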

After exchanging messages with the TACC staff and the atomate developers, we hypothesized that this error is caused either by more than 48 tasks being assigned to the same compute node or by the VASP environment not propagating correctly to new compute shells, resulting in a corrupted IMPI environment. I tried to resolve this issue by setting my qadaptor.yaml as follows:

_fw_name: CommonAdapter

_fw_q_type: SLURM

rocket_launch: rlaunch -c /home1/09341/jamesgil/atomate/config rapidfire --max_loops 3

nodes: 1

ntasks_per_node: 20

walltime: 48:00:00

queue: normal

account: TG-MAT210016

job_name: null

mail_type: "START,END"

mail_user: [email protected]

pre_rocket: conda activate atomate_env2; module load intel/17.0.4; module load impi/17.0.3; module load vasp/6.3.0

post_rocket: conda deactivate; module purge

logdir: /home1/09341/jamesgil/atomate/logs

The changes I made were as follows:

- I set ntasks_per_node to 20 to prevent overloading a single node

- I added “module load” statements for all the modules I need to run VASP on a compute node as a pre_rocket step, ensuring that any new shell opened to run a job script will load these modules

Sorry for the extensive background, but I want to give some proper context before introducing my latest error:

After making these changes, I am now getting a VasprunXMLValidator error in my FW_job-#####.error file. This is from running wf_elastic_constant() with pure metallic vanadium as the input structure (taken from the Materials Project). My two global output files are as follows:

FW_job-######.out:

2023-06-12 10:42:36,272 INFO Hostname/IP lookup (this will take a few seconds)

2023-06-12 10:43:23,531 INFO Created new dir /scratch/09341/jamesgil/atomate_test/elasticRegPrintVASPcmd/launcher_2023-06-12-15-43-23-528355

2023-06-12 10:43:23,534 INFO Launching Rocket

2023-06-12 10:43:24,204 INFO RUNNING fw_id: 551 in directory: /scratch/09341/jamesgil/atomate_test/elasticRegPrintVASPcmd/launcher_2023-06-12-15-43-23-528355

2023-06-12 10:43:24,417 INFO Task started: FileWriteTask.

2023-06-12 10:43:24,425 INFO Task completed: FileWriteTask

2023-06-12 10:43:24,504 INFO Task started: {{atomate.vasp.firetasks.write_inputs.WriteVaspFromIOSet}}.

2023-06-12 10:43:24,837 INFO Task completed: {{atomate.vasp.firetasks.write_inputs.WriteVaspFromIOSet}}

2023-06-12 10:43:24,917 INFO Task started: {{atomate.vasp.firetasks.run_calc.RunVaspCustodian}}.

vasp command loaded into custodian: ['ibrun', 'vasp_std']

2023-06-12 11:03:04,603 INFO Rocket finished

2023-06-12 11:03:05,065 INFO Sleeping for 60 secs

2023-06-12 11:04:05,126 INFO Checking for FWs to run...

2023-06-12 11:04:05,389 INFO Sleeping for 60 secs

2023-06-12 11:05:05,450 INFO Checking for FWs to run...

2023-06-12 11:05:05,745 INFO Sleeping for 60 secs

2023-06-12 11:06:05,806 INFO Checking for FWs to run...

NOTE: the line "vasp command loaded into custodian: " is a print statement I added to atomate/vasp/firetasks/run_calc.py to see what VASP command was actually passed into custodian, as per the recommendation here

FW_job-######.error:

Traceback (most recent call last):

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/custodian/vasp/validators.py", line 36, in check

Vasprun("vasprun.xml")

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/pymatgen/io/vasp/outputs.py", line 368, in __init__

self._parse(

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/pymatgen/io/vasp/outputs.py", line 481, in _parse

raise ex

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/pymatgen/io/vasp/outputs.py", line 396, in _parse

for event, elem in ET.iterparse(stream):

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/xml/etree/ElementTree.py", line 1253, in iterator

yield from pullparser.read_events()

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/xml/etree/ElementTree.py", line 1324, in read_events

raise event

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/xml/etree/ElementTree.py", line 1296, in feed

self._parser.feed(data)

xml.etree.ElementTree.ParseError: not well-formed (invalid token): line 1037, column 0

ERROR:custodian.custodian:Validation failed: VasprunXMLValidator

Traceback (most recent call last):

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/fireworks/core/rocket.py", line 261, in run

m_action = t.run_task(my_spec)

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/atomate/vasp/firetasks/run_calc.py", line 295, in run_task

c.run()

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/custodian/custodian.py", line 382, in run

self._run_job(job_n, job)

File "/home1/09341/jamesgil/mambaforge/envs/atomate_env2/lib/python3.9/site-packages/custodian/custodian.py", line 504, in _run_job

raise ValidationError(s, True, v)

custodian.custodian.ValidationError: Validation failed: VasprunXMLValidator

INFO:rocket.launcher:Rocket finished

DEBUG:launchpad:Aggregation '[{'$match': {'$or': [{'spec._fworker': {'$exists': False}}, {'spec._fworker': None}, {'spec._fworker': 'Stampede2_skx-normal'}], 'spec._category': {'$exists': False}, 'state': {'$in': ['RUNNING', 'RESERVED']}}}, {'$project': {'fw_id': True, '_id': False}}]'.

INFO:rocket.launcher:Sleeping for 60 secs

INFO:rocket.launcher:Checking for FWs to run...

DEBUG:launchpad:Aggregation '[{'$match': {'$or': [{'spec._fworker': {'$exists': False}}, {'spec._fworker': None}, {'spec._fworker': 'Stampede2_skx-normal'}], 'spec._category': {'$exists': False}, 'state': {'$in': ['RUNNING', 'RESERVED']}}}, {'$project': {'fw_id': True, '_id': False}}]'.

INFO:rocket.launcher:Sleeping for 60 secs

INFO:rocket.launcher:Checking for FWs to run...

DEBUG:launchpad:Aggregation '[{'$match': {'$or': [{'spec._fworker': {'$exists': False}}, {'spec._fworker': None}, {'spec._fworker': 'Stampede2_skx-normal'}], 'spec._category': {'$exists': False}, 'state': {'$in': ['RUNNING', 'RESERVED']}}}, {'$project': {'fw_id': True, '_id': False}}]'.

INFO:rocket.launcher:Sleeping for 60 secs

INFO:rocket.launcher:Checking for FWs to run...

As you can see, the problem starts because of a malformed vasprun.xml, which then cannot be read or validated.
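To see what is actually wrong at the reported position (line 1037, column 0), the parse error can be reproduced outside custodian with the standard library parser that appears in the traceback. The following is a minimal sketch: `find_xml_error` is a hypothetical helper name, not part of custodian or pymatgen, and it assumes the vasprun.xml in the launcher directory is uncompressed.

```python
import xml.etree.ElementTree as ET


def find_xml_error(path, context=2):
    """Return None if the file is well-formed XML.

    Otherwise return a dict with the 1-based line, 0-based column,
    the parser message, and a few surrounding lines of the file.
    """
    try:
        for _event, elem in ET.iterparse(path):
            elem.clear()  # keep memory use low on large vasprun.xml files
    except ET.ParseError as exc:
        line, column = exc.position
        with open(path, errors="replace") as fh:
            lines = fh.read().splitlines()
        start = max(line - 1 - context, 0)
        return {
            "line": line,
            "column": column,
            "message": str(exc),
            "snippet": lines[start:line + context],
        }
    return None


if __name__ == "__main__":
    import sys

    result = find_xml_error(sys.argv[1])
    if result is None:
        print("well-formed")
    else:
        print(result["message"])
        for text in result["snippet"]:
            print(repr(text))
```

Printing the snippet with `repr` makes stray bytes or interleaved output visible, which helps distinguish a truncated file from one that had something else written into it.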

The following is the FW_submit.script

#!/bin/bash -l

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=20

#SBATCH --time=48:00:00

#SBATCH --partition=normal

#SBATCH --account=TG-MAT210016

#SBATCH --job-name=FW_job

#SBATCH --output=FW_job-%j.out

#SBATCH --error=FW_job-%j.error

#SBATCH --mail-type=START,END

#SBATCH [email protected]

conda activate atomate_env2; module load intel/17.0.4; module load impi/17.0.3; module load vasp/6.3.0

cd /scratch/09341/jamesgil/atomate_test/elasticRegPrintVASPcmd

rlaunch -c /home1/09341/jamesgil/atomate/config rapidfire --max_loops 3

conda deactivate; module purge

# CommonAdapter (SLURM) completed writing Template

I submit my workflows using:

qlaunch singleshot

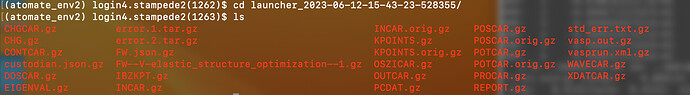

If I enter the resulting launcher folder, it looks as follows:

error.1.tar.gz contains a completed VASP run with ISMEAR = -5. error.2.tar.gz contains a partially complete VASP run with ISMEAR = 2 and SIGMA = 0.2. This calculation appears to stop abruptly partway through, presumably because the LargeSigmaHandler steps in and adjusts the SIGMA value. Finally, the calculation in the main folder is the one with ISMEAR = 2 and SIGMA = 0.14. This VASP run appears to complete successfully (based on the OUTCAR and vasp.out), but the structure optimization step still shows as a fizzled firework. There is nothing printed to the std_err.txt file here, nor any indication of the VasprunXMLValidator error in the vasp.out or OUTCAR.
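The ISMEAR and SIGMA values for each attempt can be read directly from the archives without unpacking them. The following is a minimal sketch using only the standard library: the function names are hypothetical, and it assumes each error.N.tar.gz contains a file named INCAR, as the archives described above appear to.

```python
import glob
import os
import re
import tarfile


def incar_tags_from_archive(archive_path, tags=("ISMEAR", "SIGMA")):
    """Return {tag: value-as-string} from the first INCAR in a tar archive."""
    found = {}
    with tarfile.open(archive_path, "r:*") as tar:
        for member in tar.getmembers():
            name = member.name.rsplit("/", 1)[-1]
            if not member.isfile() or name != "INCAR":
                continue
            raw = tar.extractfile(member).read()
            text = raw.decode("utf-8", errors="replace")
            for tag in tags:
                pattern = rf"^\s*{re.escape(tag)}\s*=\s*([^\s;#!]+)"
                match = re.search(pattern, text, flags=re.MULTILINE)
                if match:
                    found[tag] = match.group(1)
            break
    return found


def summarize_launcher(launch_dir):
    """Map each error.*.tar.gz in launch_dir to its ISMEAR/SIGMA settings."""
    archives = sorted(glob.glob(os.path.join(launch_dir, "error.*.tar.gz")))
    return {os.path.basename(p): incar_tags_from_archive(p) for p in archives}


if __name__ == "__main__":
    import sys

    for name, tags in summarize_launcher(sys.argv[1]).items():
        print(name, tags)
```

Comparing this output with the INCAR in the main folder confirms the sequence of corrections without having to extract each archive by hand.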

I appreciate the patience of whoever reads this ungodly post - I’m sorry it’s so long. I want to give as much context and information as possible, and if I can supply any additional information that may help in troubleshooting, I’m happy to pass it along.

Does anyone have any ideas on how I should move forward?